Generate SPMetal

Even though SPMetal doesn't come into play for All Code deployments (nor could it: you'd land in a catch-22 trying to use code generated from a structure to provision that very structure), it's still a crucial part of any data-intensive SharePoint 2013 project; without it, you are essentially building a 2007 site with a ribbon.

A Bit Of Background

I don't quite have enough to say about SPMetal to break it off into its own section, so we'll just chat about it "inline" with the rest of the deployment process. In a nutshell, SPMetal is a command-line tool that provides a "hint-and-generate" experience for creating an Entity Framework-style data model for your SharePoint site, with full Linq integration. This is Microsoft's answer to our cries for Linq-To-SharePoint.

Thanks Microsoft!

By "hint-and-generate" I mean that, sigh, you need to sling some XML to tell SPMetal how to generate your objects. There's no way around this: there's no designer and no API. You can run SPMetal blindly without any hinting XML, but the result is rarely as usable as code generated from a human-massaged XML file. The details of this markup and how to use it are outside the scope of this book; all we need to know is that we specify content types, site columns, and corresponding .NET class names in the XML file, and then run an SPMetal command to generate code we can put into our project. We'll see an example in a bit.

The problem with SharePoint as a pseudo-RDBMS is that the schema of its tables (lists) is, by design, dynamic. Using string representations of field display names to pull data from an SPListItem object reminds me, with a shudder, of dealing with DataReaders back in my ADO.NET days. Barf. I love the fact that I've barely had to write a line of T-SQL or instantiate a single DataAdapter since I let EF into my heart.

Since then, I've been greedily generating my entire business object layer and loving it for my conventional client/server applications. But with such a wonderful development experience for our ASP.NET / WPF / Silverlight projects, why were we still in the querying Stone Age for SharePoint? Well, that all changed when SharePoint 2010 first introduced SPMetal. It's like a time machine that beams our collaboration applications into modern times. I even love the technology's name: it's very descriptive of how your Linq objects are nestled into the rest of your code base.

Basically, like the steel girders that rigidly hold skyscrapers together, SPMetal welds your business objects into place. There's still the problem that anyone with Designer (or higher) permissions can break your code with a mere few clicks. However, as soon as that happens, you can restore your local environment from a production backup, regenerate your data layer, and let the resulting compilation errors point you to everything that changed and needs fixing.

I think this metallic rigidity is a small price to pay for a Linq-ish development experience on a SharePoint project. Maybe in the future we'll have something a bit more malleable than SPMetal to work with (some future names I've come up with are SPClay, SPJello, and SPTemporpedicHyperFoam). But for now, SPMetal it is.

SPMetal Collaboration

Although it works pretty well during development, the hint-and-generate experience creates a few interesting scenarios when it comes to deployment and source control. A lot of times you'll add some XML to your SPMetal parameters file, save and regen, rebuild, and suddenly have hundreds of compilation errors. Unfortunately, one errant little typo in your SPMetal XML can break your entire data layer. When this happens, the quickest triage is to pop into the command line and run an SPMetal generation against your model manually.

The only other complaint I've had is that SPMetal is simply still a fussy infant of a technology. The way I deal with first or second generations of such new technologies is to save often, and have a good attitude toward taking the time for artificial breaks in my day caused by having to cycle Visual Studio. Thinking back to my hair-thinning SharePoint 2003 development days, this is, like I said, a relatively small price to pay for finally getting Linq-To-SharePoint.

All that said, SPMetal does have some deployment ramifications to discuss, mainly around Visual Studio structure and TFS sharing. Like any other Deployment Driven Design paradigm, we need to plan our solution structure ahead of time to make this much easier for everyone on our team. So the first thing we need to do is forge some SPMetal!

Like any other data access layer, I like to organize my SPMetal code into its own Visual Studio project. That way, all of our SharePoint projects in the solution (as well as the data creator) can consume the site's schema in one centralized place. Also, our SPMetal logic can cleanly reference our Common project, giving it access to not only the Utilities methods, but more importantly our Constants class. This project will also contain partial classes, WCF services, and really anything else that would be used to serve up data from SharePoint to the UI.

Other than our XML file and the resulting generated C#, I like to wrap all data access calls to the underlying context in a static utilities class (usually called something like SPRepository). This way, as our schema updates (and occasionally breaks), we don't have to rummage through our web parts to touch things up. It also gives us a single point of entry to do things like elevate permissions cleanly, or to swap other application tiers, such as data caching or error logging, in and out.
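To make the "single point of entry" idea concrete, here is a minimal sketch of a helper that an SPRepository-style class could funnel its queries through, so caching and error logging live in exactly one place. The names (RepositoryGateway, Run, Invalidate, Log) are hypothetical, and the cache is assumed to be a plain in-memory dictionary; this illustrates the pattern, it is not part of the book's DDD.Data project. Keep in mind that a shared cache like this ignores SharePoint's per-user security trimming, so it only suits data that every user is allowed to see.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical gateway: every repository method routes its query through Run,
// so cross-cutting concerns (caching, logging, elevation) are written once.
public static class RepositoryGateway
{
    // Assumed in-memory cache keyed by a caller-chosen string.
    private static readonly ConcurrentDictionary<string, object> Cache =
        new ConcurrentDictionary<string, object>();

    // Hypothetical logging hook; swap in your real logger.
    public static Action<Exception> Log = ex => Console.Error.WriteLine(ex.Message);

    public static T Run<T>(string cacheKey, Func<T> query)
    {
        object cached;
        if (Cache.TryGetValue(cacheKey, out cached))
            return (T)cached;

        try
        {
            // In SharePoint, this is also where a query could be wrapped in
            // SPSecurity.RunWithElevatedPrivileges when elevation is needed.
            T result = query();
            Cache[cacheKey] = result;
            return result;
        }
        catch (Exception ex)
        {
            // Log once here, then let the caller decide how to handle it.
            Log(ex);
            throw;
        }
    }

    // Drop a cached result, e.g. after a write changes the underlying list.
    public static void Invalidate(string cacheKey)
    {
        object ignored;
        Cache.TryRemove(cacheKey, out ignored);
    }
}
```

A repository method would then wrap its SPMetal query in a lambda, along the lines of `return RepositoryGateway.Run("articles", () => { /* new up the context and query it */ });`, and call `Invalidate("articles")` after any update. The web parts never see the difference.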

Refactoring SPMetal into its own project also makes our TFS experience a little nicer. Using an offline mechanism to generate code based off the current state of a SharePoint site as it existed at a particular time in a particular isolated environment can easily lead to annoying (and even detrimental) synchronization issues among your team members. Here's an example of such a scenario:

- Tiffani adds a new content type and list to her All Code deployment script, runs it, and generates an updated SPMetal object model.

- She deploys her SharePoint solution.

- Like every good developer, she smoke tests her code in her local environment before checking it in.

- Amber, her team member, gets the latest, and rebuilds. Everything will properly recompile, since the code in the SPMetal project is in sync with the consuming SharePoint projects.

- However, when Amber deploys Tiffani's code into her dev environment, she'll get runtime errors: her structure doesn't match Tiffani's; columns won't exist or lists will be missing content types.

Now, the right way for Amber to deal with this is either to be very diligent and blow-away-recreate her site collection every time there's a change to the SPMetal project, or at least to keep some chatter going with Tiffani. Since TFS guarantees synchronization of your code base only, not your site's structure, you need to communicate changes to the deployment script.

"Hey Amber, I merged two content types; make sure to re-gen next time you get the latest," Tiffani might say. Or she could email the dev team, or at least enter a specific check-in comment if her new code will introduce breaking changes to the build. Unless you wire your All Code deployment script into your continuous integration build server, keep up the chatter with your team.

The wrong way to approach SPMetal conflicts is to frantically regenerate your model when getting the latest causes regression issues. All that accomplishes is reverting other people's work. You need to trust that your team will be checking in good code and that it's up to you to integrate it into your environment. So if getting the latest breaks your build or suddenly causes runtime errors, take a deep breath and do the following before going postal on your colleagues:

- Blow-away-recreate your site collection. This is always a good first step; it's like the IISRESET of deployment. Keep SiteCollectionResetter.ps1 close by at all times.

- If you can compile, then simply deploy and run your script. This should sync the structure with the code base.

- If you can't compile, then you have to deal with the integration manually. Some activities to this end might entail:

  - See if you can compile only your SharePoint project (as opposed to doing a Rebuild All). If so, you can deploy your new structure, regen your SPMetal, and sync everything up.

  - You might have to update some web part or other consuming logic to jibe with the new SPMetal object model.

  - If nothing else is panning out, see if anyone else on the team has the same issues or hasn't checked something in. (I know I talked about trusting your team earlier, but not to the extent that you waste half a day like a Visual Studio dog chasing a TFS parked car.)

- Once this is resolved, you can deploy and provision cleanly.

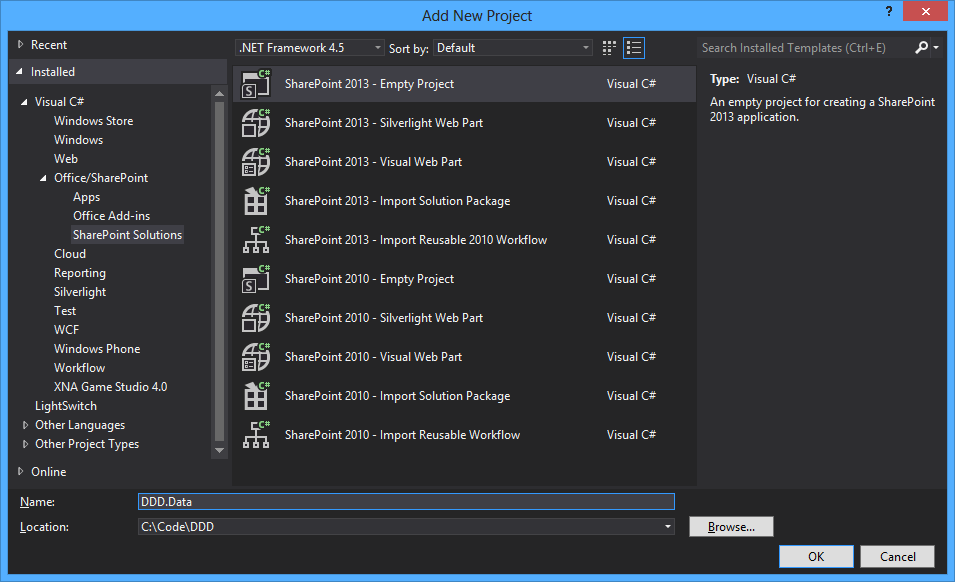

The Data Project

Let's generate a model. First, create a new Empty SharePoint project (I'm going to be calling it DDD.Data) and wire it to your local site collection. Add references to Microsoft.SharePoint and Microsoft.SharePoint.Linq (from Dependencies, of course). Follow as many of the guidelines provided in the "The Code/The Web Part/Deployment" section as possible to stick to our solution conventions (such as the build output path). Also, update your "DoEverythinger" PowerShell script as follows (the new lines are the two that deploy DDD.Data.wsp):

Code Listing 56: DoEverythinger.ps1

```powershell
...
#deploy
Write-Host;
Write-Host ("Deploying Solutions") -ForegroundColor Magenta;
$script = Join-Path $path "\SolutionDeployer.ps1";
.$script -wsp DDD.Data.wsp;
$script = Join-Path $path "\SolutionDeployer.ps1";
.$script -wsp DDD.Web.wsp;
$script = Join-Path $path "\SolutionDeployer.ps1";
.$script -wsp DDD.WebParts.wsp -url $siteUrl;
...
```

I was pretty surprised to learn that Visual Studio 2012 and its SharePoint 2013 tools didn't ship with a built-in template for SPMetal definitions, so we'll have to do this oldskool. This includes a build event that calls SPMetal.exe (which is in 15\bin) and uses an XML file to mold the sculpture of the data layer it generates. Like I said, I was hoping for a template, or even a designer, to do the heavy lifting for us. Maybe in Visual Studio 20...I don't know...18 we'll get some love?

First, add a new XML file to DDD.Data named "Rollup.xml" and populate it with the following markup:

Code Listing 57: Rollup.xml

```xml
<?xml version="1.0" encoding="utf-8"?>
<Web xmlns="http://schemas.microsoft.com/SharePoint/2009/spmetal">
  <List Name="Pages" Member="Articles">
    <ContentType Name="Rollup Article" Class="Article" />
  </List>
  <ExcludeOtherLists />
  <ContentType Name="Rollup Article" Class="RollupArticle">
    <Column Name="Title" />
    <Column Name="RollupDate" />
    <Column Name="ExternalLink" />
    <Column Name="CategoryLookup" />
    <ExcludeOtherColumns />
  </ContentType>
  <ContentType Name="Rollup Category" Class="RollupCategory" />
  <ExcludeOtherContentTypes />
</Web>
```

Next, we need to wire up a pre-build event to do the generation. We can't use a post-build event like we have for everything else, because the freshly generated code has to be in place before the compiler runs. Go into the properties of DDD.Data, click the "Build Events" tab, and enter the following in the "Pre-build event command line" field exactly as I have it here:

```
cd..
cd\Program Files\Common Files\microsoft shared\Web Server Extensions\15\BIN
spmetal.exe /web:http://$(SolutionName).local/category/rollup /namespace:$(ProjectName) /code:"$(ProjectDir)RollupEntities.cs" /parameters:"$(ProjectDir)Rollup.xml"
```

Like I said, SPMetal is not the subject of this book, so I won't go into detail about the above mess. The one thing to note is the URL in the "web" switch. Each SPMetal context deals with only a particular SPWeb, so use this URL to specify which one. You can add as many XML files as you want to gen as many SPMetal contexts as you need (one for each sub site, if necessary): just add a new XML file to your project, then add another spmetal command whose "parameters" switch points at it and whose "code" switch names a new output file.

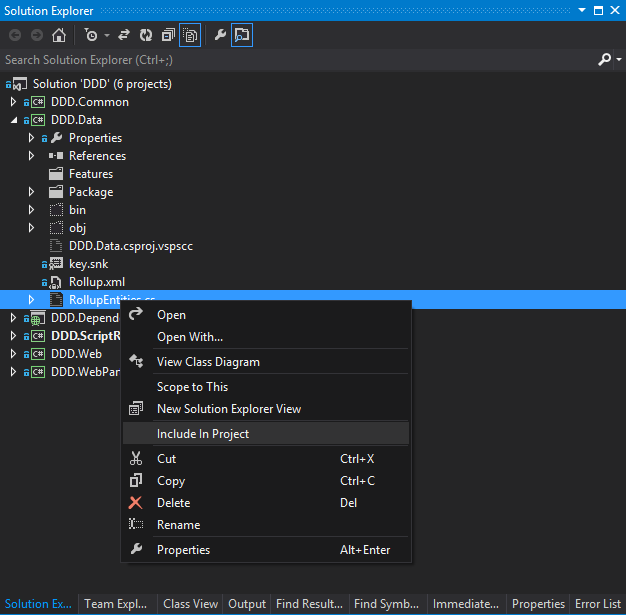

After the build, the file specified at the end of the "code" switch will be written directly into the DDD.Data folder. Include it in the project manually the first time.

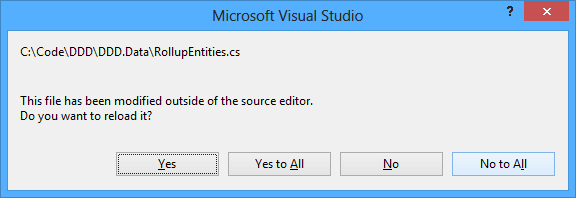

After every subsequent build, you'll be reminded that your file has been regenerated.

You can either REM this out when it's not going to change for a while, or simply keep the file closed. But don't leave it open, like someone in a car ignoring the annoying beep that sounds when they don't buckle their seat belt, because the new bits won't load and the previous version of the file will be built. I recommend rebuilding early and often to make sure everything compiles, but then REM-ing it out when you move over to web part development. Besides, we shouldn't be hanging out in designer code files anyway. As we saw with Amber and Tiffani, we can get into trouble if we don't keep our SPMetal data layer synced up with what Structure is provisioning.

One thing I like to add (as a nice example of a few different architectural goodies) is a partial class to my base content type, which, in a publishing paradigm, is almost always the "Page" class in my entities. (From the All Code deployment of our Structure feature, you should see that "RollupArticle" inherits from "Page.")

Do an "Add | Class..." on DDD.Data and name it "Page." Next, change its access modifier to "public" and add the "partial" keyword. Like I said, think Entity Framework when you work with SPMetal: all of the generated classes are ready for partials, polymorphism, databinding, and everything else we'd expect from a modern-day ORM.

What is this class going to do? One thing noticeably missing from the base objects that SPMetal generates is a relative URL for our pages. This partial will inject a read-only property that will allow our base page, and anything derived from it, to be aware of its URL. The following code is all you need to see to understand how powerful SPMetal is as a true data access layer:

Code Listing 58: Page.cs

```csharp
namespace DDD.Data
{
    public partial class Page
    {
        #region Properties
        public string RelativeURL
        {
            get
            {
                return string.Format("{0}/{1}", this.Path, this.Name);
            }
        }
        #endregion
    }
}
```

Another good use of partial classes is to finesse what is pooped out of SPMetal's code generation engine. An example of this is our RollupDate column, which is gened as a nullable DateTime. This is not at all a poorly generated type, but it will require a null check and, inevitably, ToShortDateString formatting to be pervasively useful. I prefer dealing with these kinds of situations via another partial class called "RollupArticle" (to match the class name of the content type from the XML file):

Code Listing 59: RollupArticle.cs

```csharp
namespace DDD.Data
{
    public partial class RollupArticle
    {
        #region Properties
        public string Date
        {
            get
            {
                //null check
                if (this.RollupDate.HasValue)
                    return this.RollupDate.Value.ToShortDateString();
                else
                    return "N/A";
            }
        }
        #endregion
    }
}
```

Not only can we refactor the nullable type's "HasValue" check into a single place, but we can also put some business logic here as well. I like having the DateTime object proper at my disposal to do any time calculations, but when it comes to displaying it on the UI, I almost always just want a quick string representation of it. By using partials, I can have a "display mode" concept for my properties without disrupting their original values.
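Because both halves of a partial class compile into a single type, the raw nullable value and its display form can live side by side. The following self-contained sketch fakes the SPMetal-generated half with a single auto-property (an assumption for illustration; the real generated class carries far more plumbing) and adds a hypothetical IsOlderThan rule to show "business logic" working off the untouched DateTime? while the UI binds to the string.

```csharp
using System;

// Stand-in for the SPMetal-generated half (hypothetical: the real generated
// class also carries change tracking and column mapping attributes).
public partial class RollupArticle
{
    public DateTime? RollupDate { get; set; }
}

// Hand-written half: same type, normally kept in its own file.
public partial class RollupArticle
{
    // "Display mode" view of RollupDate for the UI.
    public string Date
    {
        get { return RollupDate.HasValue ? RollupDate.Value.ToShortDateString() : "N/A"; }
    }

    // Hypothetical business rule that works off the raw DateTime? value.
    public bool IsOlderThan(int days)
    {
        return RollupDate.HasValue && RollupDate.Value < DateTime.Today.AddDays(-days);
    }
}
```

Regenerating the data layer only rewrites the generated half, so the hand-written members survive every regen as long as the class name in the XML file stays the same.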

Another little tweak I usually make is to turn object tracking off by default whenever a new context is spun up. This is the same performance tweak you may know from LINQ to SQL and EF, and it works the same way in SPMetal. Object tracking is required if you are going to execute any update operations against the context; for reads, however, it just gets in the way. Add another class named "RollupEntitiesDataContext" (or <whatever>DataContext, where "whatever" is the file name in the "code" switch of the pre-build command). Here's what my "RollupEntitiesDataContext.cs" file looks like:

Code Listing 60: RollupEntitiesDataContext.cs

```csharp
using System;
using Microsoft.SharePoint;
namespace DDD.Data
{
    public partial class RollupEntitiesDataContext
    {
        #region Initialization
        //the constructor name must match the generated class name
        public RollupEntitiesDataContext() : this(SPContext.Current.Web.Url)
        {
            //use this default constructor for READS...explicitly set context.ObjectTrackingEnabled to true for UPDATES
        }
        #endregion
        #region Events
        partial void OnCreated()
        {
            //by default we turn OFF object tracking for performance
            this.ObjectTrackingEnabled = false;
        }
        #endregion
    }
}
```
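The OnCreated override above works because of C# partial methods: the generated half of the data context declares the hook and calls it from its constructor, and our hand-written half supplies the body. Here is a self-contained sketch of that mechanism. DemoEntitiesDataContext and the URL "http://ddd.local" are hypothetical stand-ins (the real class derives from Microsoft.SharePoint.Linq.DataContext), and the generated constructor shape is an assumption modeled on what SPMetal typically emits.

```csharp
// Hypothetical stand-in for the SPMetal-generated half of the data context.
public partial class DemoEntitiesDataContext
{
    public bool ObjectTrackingEnabled { get; set; }
    public string RequestUrl { get; private set; }

    public DemoEntitiesDataContext(string requestUrl)
    {
        RequestUrl = requestUrl;
        ObjectTrackingEnabled = true; // tracking is on by default
        OnCreated();                  // generated code invokes the hook last
    }

    // Declared by the generator. If nobody implements it, the compiler
    // removes both this declaration and the call above.
    partial void OnCreated();
}

// Hand-written half, mirroring the listing above.
public partial class DemoEntitiesDataContext
{
    // Parameterless constructor chained to the URL overload; the real
    // version passes SPContext.Current.Web.Url instead of a fixed string.
    public DemoEntitiesDataContext() : this("http://ddd.local") { }

    partial void OnCreated()
    {
        // Reads are the common case, so default tracking to off.
        ObjectTrackingEnabled = false;
    }
}
```

For an update, new up the context, set ObjectTrackingEnabled back to true before running any query against it, make your changes, and then call SubmitChanges.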

Finally, let's build our repository class to wrap our SPMetal into neat and tidy data access methods. Once again, the data access layer isn't the focus of this book, but if I'm going to present a front-to-back overview of an All Code SharePoint deployment, I want to flesh out all of the components that are part of the package. So add two more classes to DDD.Data and name them "SPRepository" (for the data access class) and "ArticleModel" (for our model).

Code Listing 61: SPRepository.cs

```csharp
using System;
using System.Linq;
using Microsoft.SharePoint;
using System.Collections.Generic;
namespace DDD.Data
{
    public static class SPRepository
    {
        #region Public Methods
        public static List<ArticleModel> GetAllArticles()
        {
            //new up context
            using (RollupEntitiesDataContext context = new RollupEntitiesDataContext(string.Concat(SPContext.Current.Site.Url, "/category/rollup")))
            {
                //run query
                return context.Articles.ToList().Select(a => new ArticleModel()
                {
                    //assemble model
                    Date = a.Date,
                    Title = a.Title,
                    Url = a.RelativeURL,
                    Author = a.DocumentCreatedBy,
                    Category = a.CategoryLookupTitle
                }).ToList();
            }
        }
        #endregion
    }
}
```

Line 13 news up the SPMetal context and uses the constructor overload that "scopes" it to a particular web via its URL. The code won't bomb if you don't do this; it will simply return nothing. In Line 16, I use the "Select" method to convert the raw SPMetal objects into my fully cooked models. Also, note the first "ToList" call in this line. This method "materializes" the query, loading the data right then and there. If you use a disposal pattern without calling a materialization method, you'll sever the connection before the data is loaded, and any later lazy-loaded access will fail, saying that the context has been disposed.
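The disposal trap is easy to reproduce without SharePoint. In this sketch, FakeContext is a hypothetical stand-in for an SPMetal context whose Articles sequence is evaluated lazily and refuses to run once the context is disposed. Deferred() returns an unevaluated query from inside a using block and fails as soon as the caller enumerates it; Materialized() calls ToList() while the context is still alive and succeeds.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for an SPMetal context: Articles is evaluated lazily
// and refuses to run once the context has been disposed.
public sealed class FakeContext : IDisposable
{
    private bool disposed;
    private readonly string[] rows = { "First", "Second" };

    public IEnumerable<string> Articles
    {
        get
        {
            // Iterator body: none of this runs until someone enumerates.
            foreach (string row in rows)
            {
                if (disposed) throw new ObjectDisposedException("FakeContext");
                yield return row;
            }
        }
    }

    public void Dispose() { disposed = true; }
}

public static class MaterializationDemo
{
    // Broken: the query escapes the using block unevaluated, so it runs
    // only after Dispose() and throws ObjectDisposedException.
    public static IEnumerable<string> Deferred()
    {
        using (var context = new FakeContext())
            return context.Articles.Select(a => a.ToUpper());
    }

    // Fixed: ToList() pulls every row while the context is still alive.
    public static List<string> Materialized()
    {
        using (var context = new FakeContext())
            return context.Articles.Select(a => a.ToUpper()).ToList();
    }
}
```

Returning List<T> rather than IEnumerable<T> from repository methods, as GetAllArticles does, makes it impossible for a caller to accidentally enumerate a query after its context is gone.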

In general, these repository methods don't take many inputs, and return models to our UI ready for databinding. Newing up an SPMetal context alone doesn't really necessitate a wrapper method; it's the setting of the context, the conversion of the return objects, and the Linq itself that are refactored here. This also opens up the magical abstraction door: in the future, if SPMetal fails you, you can swap the specific repository method with pure SharePoint API / CAML queries, and your UI is none the wiser...just faster.

Next, here's our model:

Code Listing 62: ArticleModel.cs

```csharp
using System;
namespace DDD.Data
{
    public class ArticleModel
    {
        #region Properties
        public string Url { get; set; }
        public string Date { get; set; }
        public string Title { get; set; }
        public string Author { get; set; }
        public string Category { get; set; }
        #endregion
    }
}
```

As I've been stating and restating in this section, I really like to treat SPMetal like Entity Framework. Models (in MVC parlance, or POCOs, Plain Old CLR Objects, in .NET) are a best practice in modern software architecture for abstracting your data as far away from your logic as possible. This is especially helpful when we have generated data access layers and can't be guaranteed what we'll get.

Even though SPMetal lets us specify some of the class names by using the "Class" or "Member" attributes in the parameters XML file, I still like to go the extra mile here. Linq objects are heavy; they carry implementations of "INotifyPropertyChanged" and "ITrackEntityState" (to name a few) and are a lot to pass around, especially when all our UI needs is the property values. It might seem like double work to type out a POCO class, but it can actually make your code a lot easier and cleaner.

SPMetal Deployment

That's it for SPMetal! Publish DDD.Data to DDD.Common\Deployment, and update any other PowerShell scripts (we've already done DoEverythinger.ps1) that might need to operate on this WSP. The beauty of SharePoint projects with no deployable assets (other than their DLL) is that we can deploy them very easily – especially through Visual Studio directly.

In fact, there's an even faster way (assuming, again, that we'll continue to deploy only the DLL, not any features or files in _layouts) to get our data layer deployed. Since all we have to deal with is an assembly by way of project output, we can use DDD.WebParts to do the dirty work for us. First, add a normal Visual Studio project reference to DDD.Data from your SharePoint project so you can develop the UI against it (more on this in the next section).

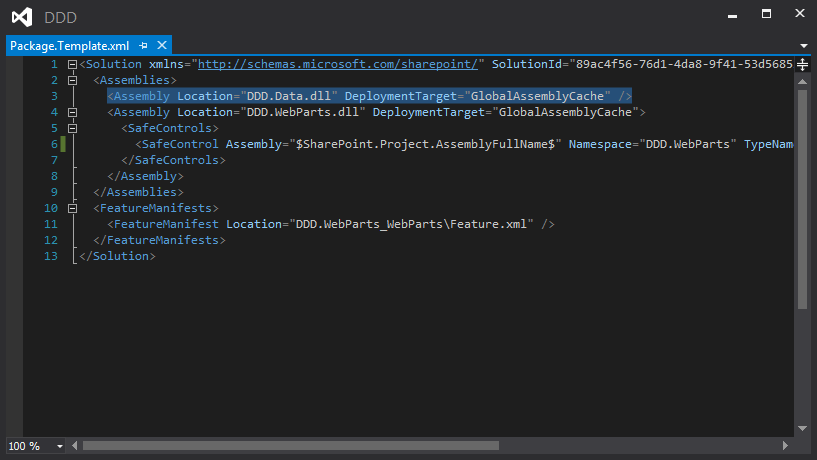

Then, go into the package designer of your SharePoint project, and add your data layer as a GAC'd assembly. If you've overridden the generated XML for your WSP, you'll have to do this manually, as shown below. Fortunately, this is very straightforward: all we need to know is the file name of the assembly and where it needs to go.

This way, right-click-deploy will suck in our SPMetal code and ensure that the latest version of it will always be available in the GAC. When deploying to production (or any server without Visual Studio) the WSP file will know what to do. So if you treat SPMetal the same as you would Linq-To-Sql or the Entity Framework during development, deployment will be just as trivial.