The PowerShell

So now we've got everything: SharePoint structure (site columns, content types, lists, a pages library, etc.), a master page and a page layout, a web part, and an actual page. The next step is to create the script that, when run, blows away our development site collection, creates a new one, and activates our feature. When the feature activation code has run to completion, we have a fully functional site with everything we need to test our web part.

Now that our deployment feature is ready to be installed and activated, the next thing we need to do is write the scripts that automate this procedure. I create a folder called "Deployment" in my Common project and deed it as the home for all my PowerShell scripts. In the following section, we'll review the different scripts used to automate both development and production deployments.

I like to think of PowerShell as a mini .NET SharePoint interpreter with some commandlet helpers built in: a place where I can run arbitrary SharePoint code that doesn't necessarily make sense in the context of either deployment logic or application logic. It's a scribble pad, and therefore I'll be a bit capricious switching between commandlets and .NET invocations.

In this section, we'll first go through and discuss the "tactical" scripts that automate a single deployment function, which include things like activating a feature, adding a solution, warming up a server, etc. Then we'll get into the aggregation of scripts into "master" ones that orchestrate several functions, sort of like a program would. These master scripts act as the single command administrators / developers will run in certain scenarios, such as rebuilding the development environment or pushing to production.

Site Collection Resetter

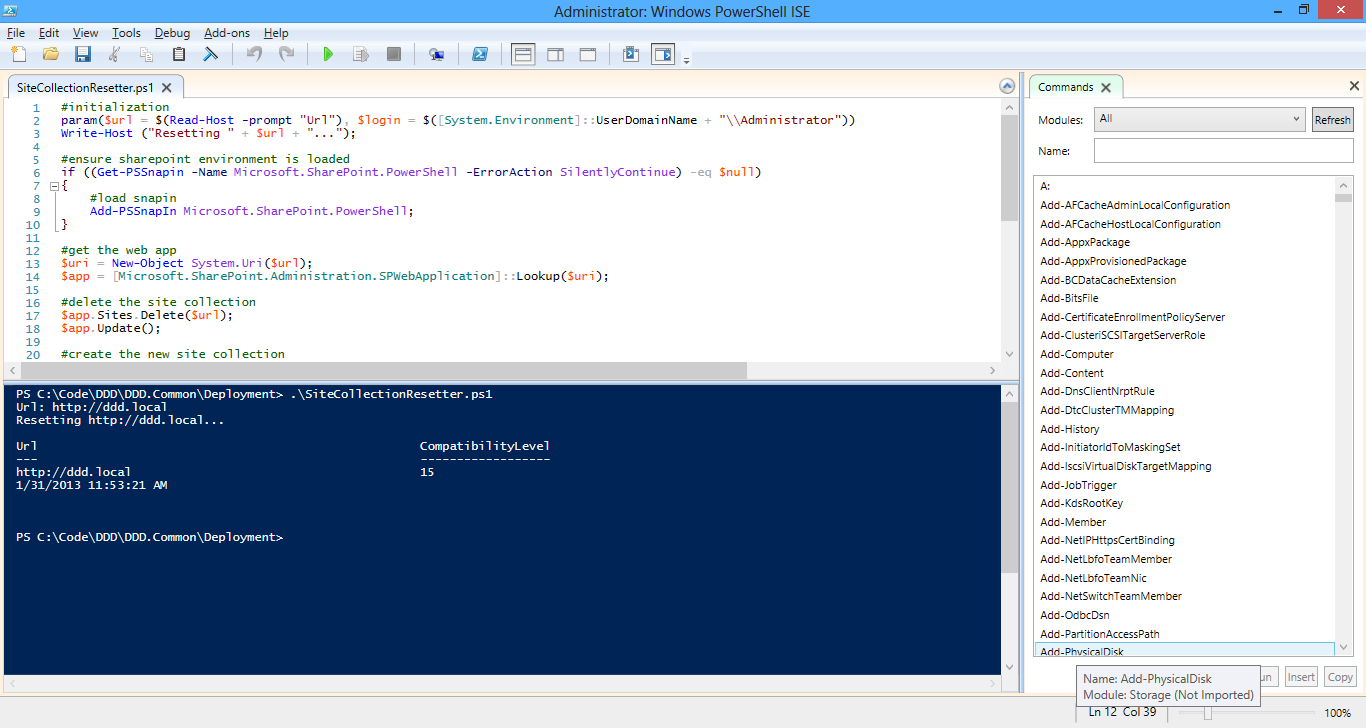

First, let's look at the site collection resetter script, called, intuitively enough, "SiteCollectionResetter." This gives us a clean site collection before activating our All Code deployment feature:

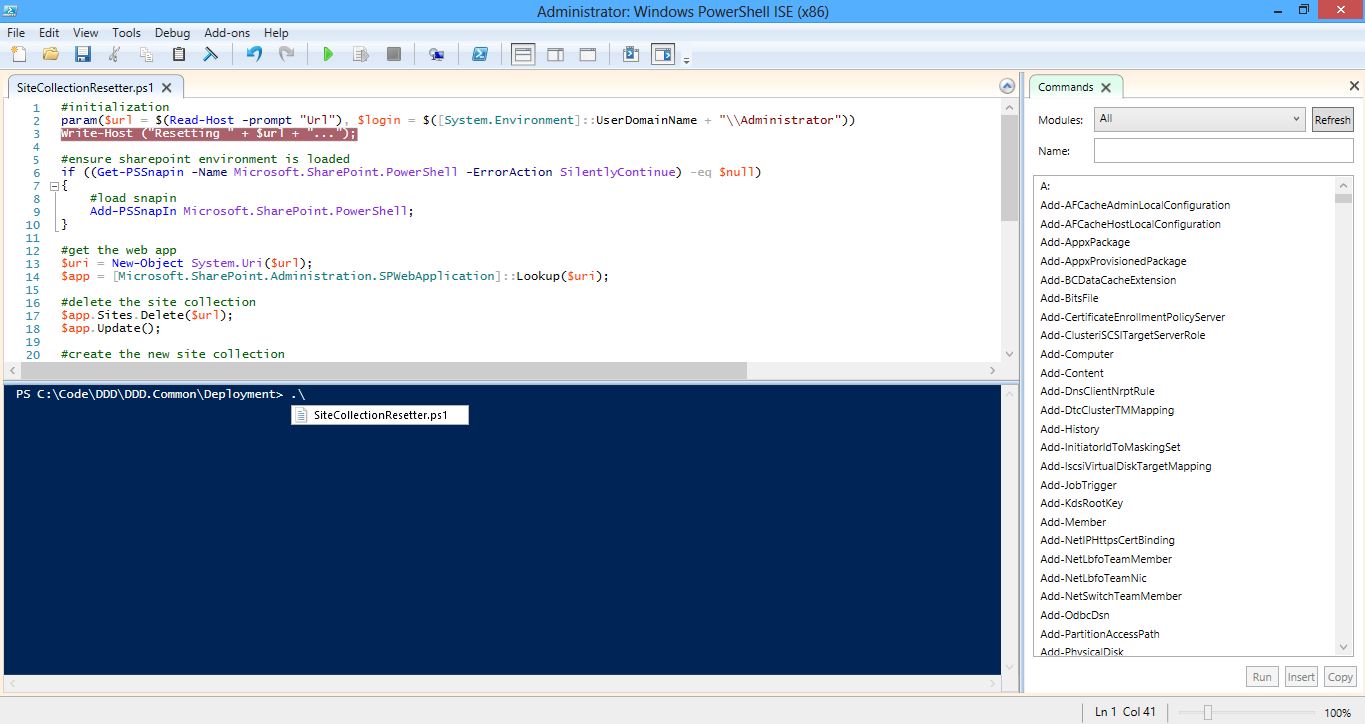

Code Listing 36: SiteCollectionResetter.ps1

```powershell
#initialization
param($url = $(Read-Host -prompt "Url"), $login = $([System.Environment]::UserDomainName + "\Administrator"))
Write-Host ("Resetting " + $url + "...");
#ensure sharepoint
if ((Get-PSSnapin -Name Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue) -eq $null)
{
    #load snapin
    Add-PSSnapIn Microsoft.SharePoint.PowerShell;
}
#delete the existing site collection
Remove-SPSite -Identity $url -Confirm:$false
#get the web app
$uri = New-Object System.Uri($url);
$app = [Microsoft.SharePoint.Administration.SPWebApplication]::Lookup($uri);
#clear mods
$app.WebConfigModifications.Clear()
$app.Update();
#create the new site collection
New-SPSite -Url $url -Name "DDD" -OwnerAlias $login -Template "BLANKINTERNETCONTAINER#0";
#done
Write-Host ([System.DateTime]::Now.ToString());
```

Like I said, my PowerShell scripts look like the love child of .NET and PowerShell commandlets. For example, Line #11 uses the built-in SharePoint commandlet to remove the existing site collection, but Line #'s 13-17 call into the SharePoint API to clear the web application's web.config mods. Basically, I'm doing what's easiest: commandlets to deal with site collections and .NET to deal with web apps.

Also, in Line #2, I use the "param" command to define the parameters of the script. In this way, you can think of each script as a method that does one specific thing. In the param command, you can specify whether your named parameters are optional, should be prompted for, or have a default value. I find this a lot cleaner than dealing with the built-in "$args" array, which forces you to read your values blindly through hard-coded indices.
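Here is a minimal sketch of the difference. It uses two hypothetical functions (`Reset-Demo` and `Reset-DemoArgs`) rather than script files. A script's top-level `param` block binds its parameters the same way a function's does, so the comparison carries over.

```powershell
# Named parameters: self-documenting, order-independent, and defaultable.
function Reset-Demo
{
    param(
        [string]$url = $(Read-Host -Prompt "Url"),  # only prompts when -url is omitted
        [string]$login = ([System.Environment]::UserDomainName + "\Administrator")
    )
    return "$url as $login"
}

# $args: positional only; what each index means lives in your head.
function Reset-DemoArgs
{
    $url = $args[0];
    $login = $args[1];
    return "$url as $login"
}

# Named parameters can be passed in any order...
Reset-Demo -login "DEV\admin" -url "http://dev"
# ...while $args values must arrive in exactly the right order.
Reset-DemoArgs "http://dev" "DEV\admin"
```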

Finally, I dump out the timestamp at the end because this code takes a while to run. I like keeping the SharePoint 2013 Management Shell open and pointed at this script. When I run into an issue, I press the up arrow and then Enter to kick it off, and then flip back into Visual Studio to fix the problem. But by the time I'm ready to redeploy, I always forget whether I already ran the reset script; the timestamp usually jogs my memory.

Script Editing

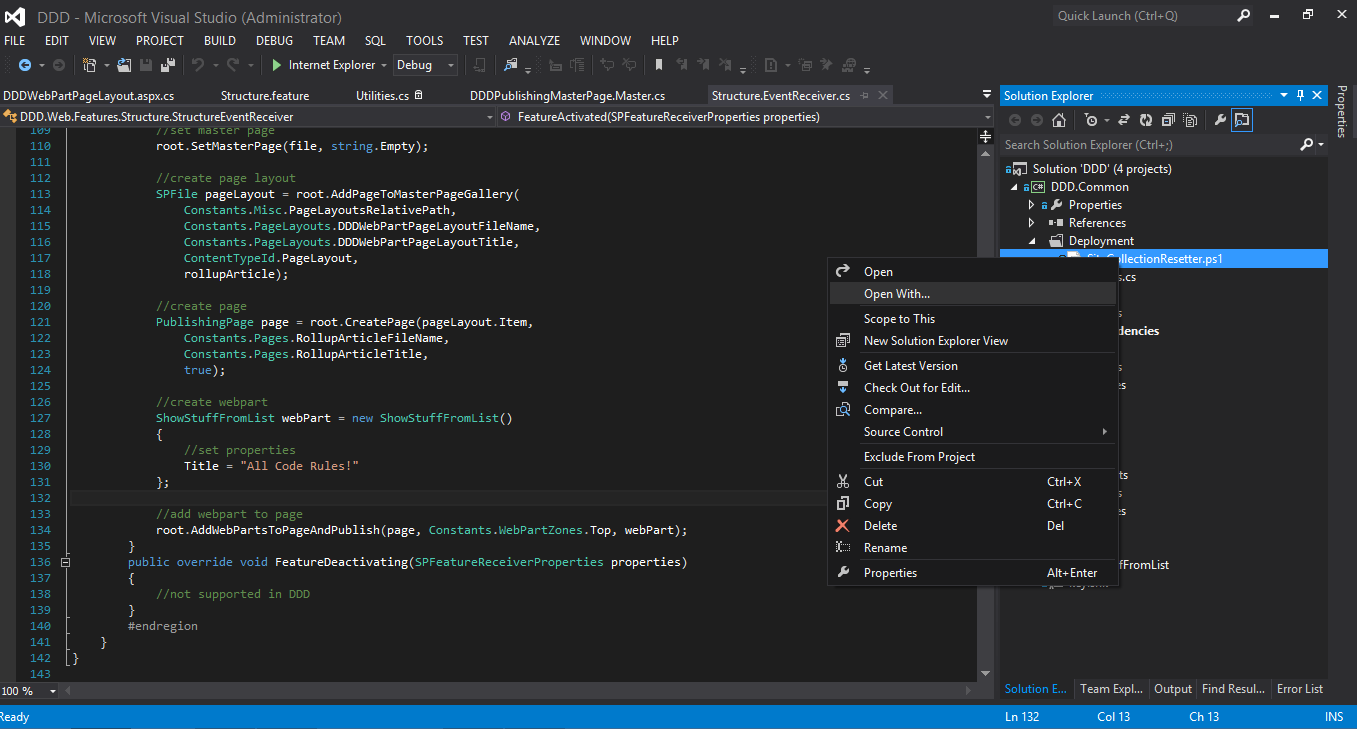

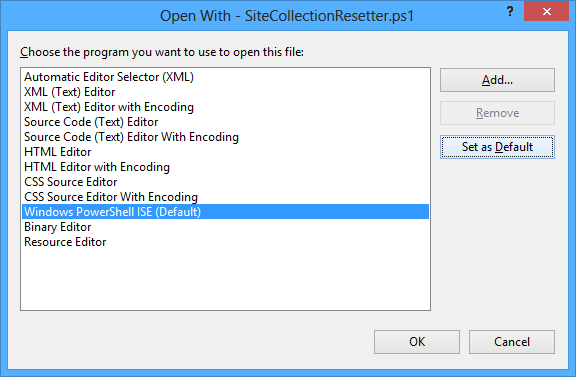

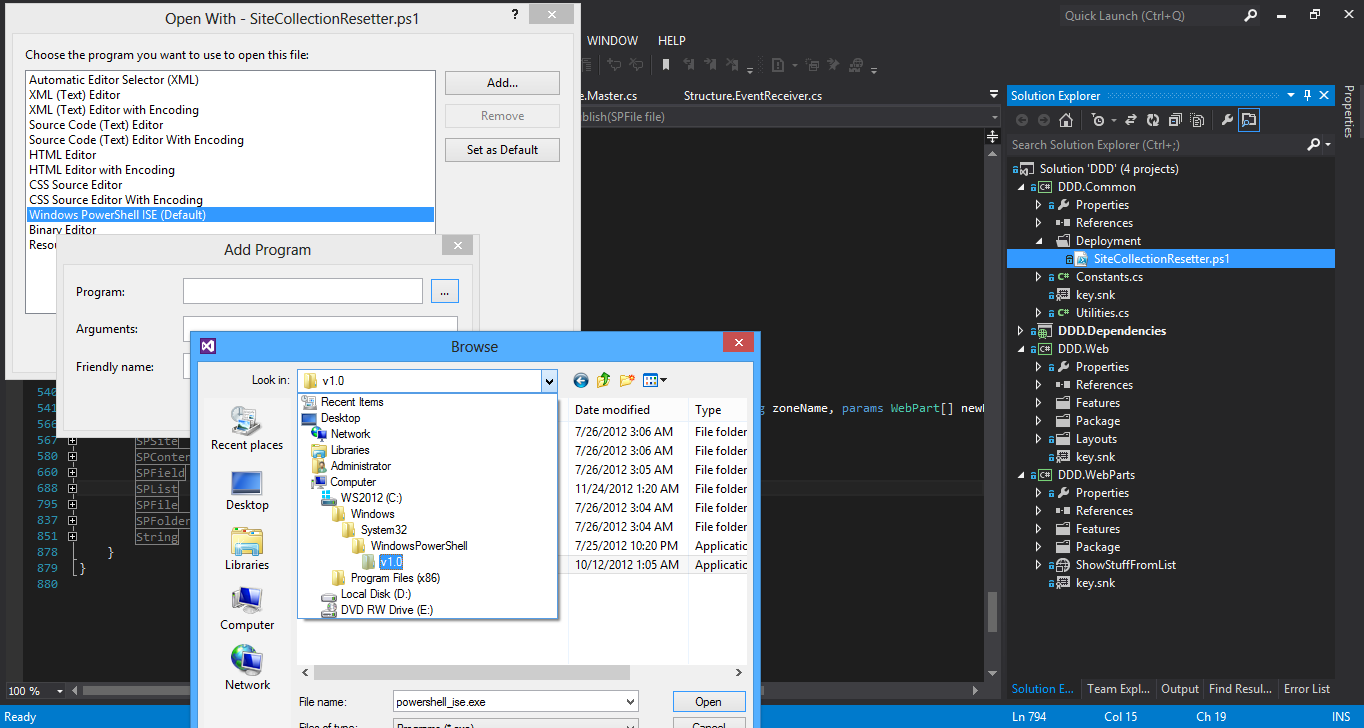

Visual Studio 2012, out of the box, still doesn't have IntelliSense for .ps1 files. Add SiteCollectionResetter.ps1 to DDD.Common\Deployment as a new item. Then right-click the file and select "Open With..." In the dialog that pops up, highlight "Windows PowerShell ISE" and then click "Set as Default." From then on, double-clicking this file (or any future .ps1 file) in Solution Explorer will open it in the ISE. On Windows Server 2012, the ISE provides the IntelliSense experience we're used to in our code files.

In this IDE, we can also set breakpoints and run scripts directly. One extra step, however, is that we need to manually load the SharePoint snapin, which the SharePoint 2013 Management Shell does for us automatically. That's what Line #'s 5-9 do above. The check skips the load if the snapin is already loaded, so we don't wait for it twice. Use the bottom pane to execute our script. On Windows Server 2012 we even get an IntelliSense-like experience and normal copy/paste functionality there.

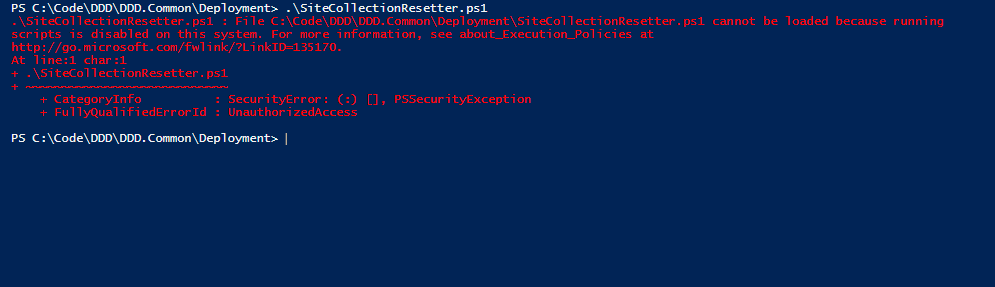

But then this happens:

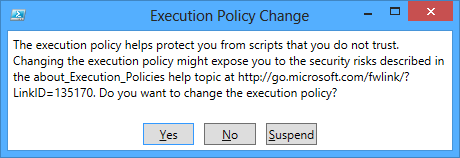

This is easily fixed by running the following command (which I recommend for development environments only; follow the link in the error message for the best configuration for your production servers): "Set-ExecutionPolicy -ExecutionPolicy Unrestricted -Scope LocalMachine" and then clicking yes on the popup that's shown:

Now our scripts can run free. Let's give it a shot!

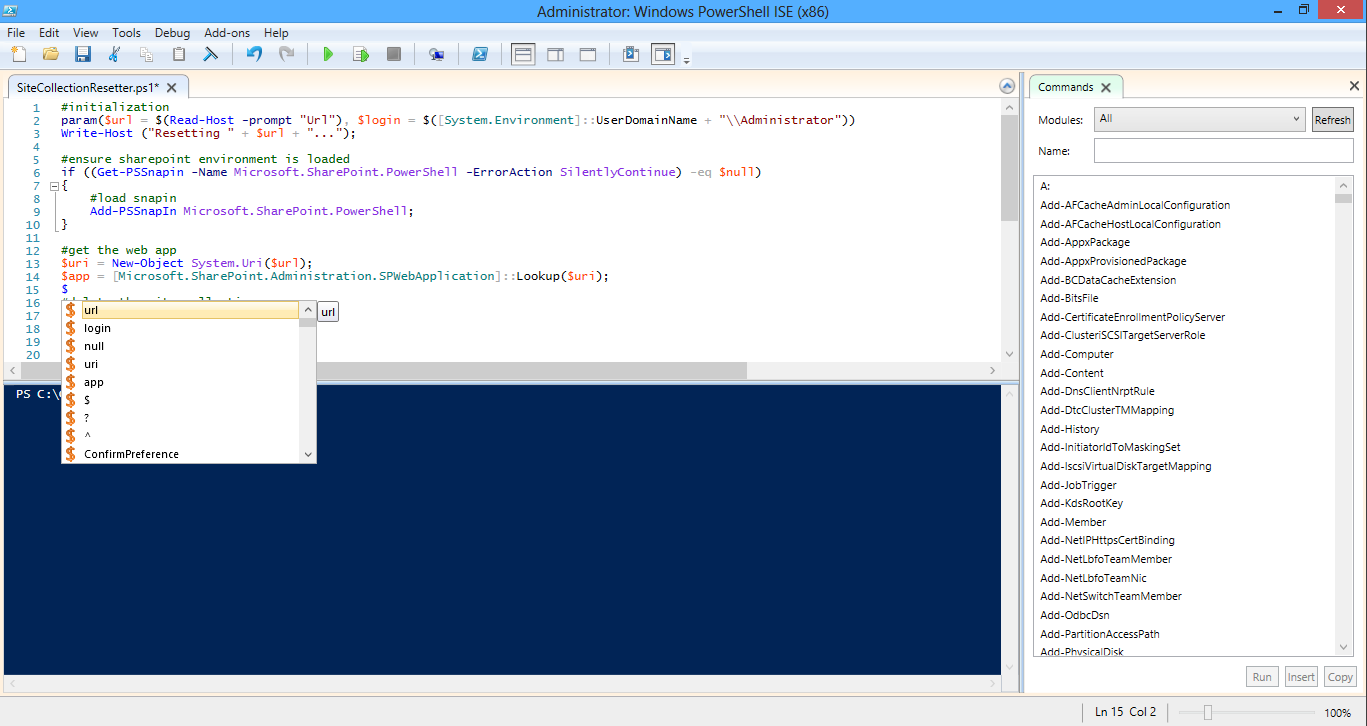

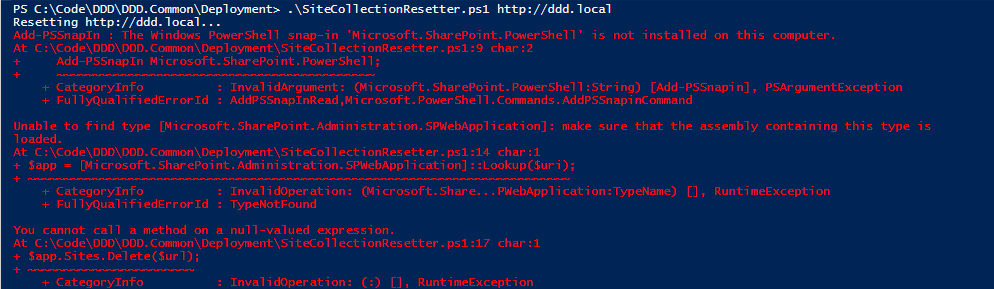

The SharePoint snapin isn't installed?? What? Turns out, it's an x86 vs. x64 issue. SharePoint is 64-bit only, so its DLLs won't load in a 32-bit process. All we have to do is run the ISE in 64-bit mode. To do this, right-click our SiteCollectionResetter.ps1 file in Solution Explorer and select "Open With..." again. But this time, on the popup, click "Add." Then, on the "Add Program" dialog, click the ellipsis ("...") button.

I ran into a potential show stopper here. On Windows Server 2012, x64 PowerShell is located at "C:\Windows\System32\WindowsPowerShell\v1.0" (the path in the screen shot above, which should have worked). Its x86 little brother is in "C:\Windows\SysWOW64\WindowsPowerShell\v1.0." The issue was that both seemed to load the x86 version, and the SharePoint 2013 DLLs fail to load there.

So I did a search for "PowerShell_ISE.exe" and a bunch more beyond the standard two from above showed up in really weird paths. The one that worked for me was "C:\Windows\WinSxS\amd64_microsoft-windows-gpowershell-exe_31bf3856ad364e35_6.2.9200.16434_none_9190d8892b50c55e." Enter this (or whatever you find that works) in the "Browse" popup's "file name" field, hit enter, and select "powershell_ise.exe." Then click "OK" on "Add Program" and, back on the original "Open With – SiteCollectionResetter.ps1" dialog, click "Set as Default" and then "OK." This will launch the proper Windows PowerShell ISE and everything should finally work!
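The whole detour above comes down to a 32-bit host. One cheap safeguard is for each script to check its own bitness before loading the snapin, so the failure message names the real problem. This is a sketch with hypothetical function names (`Test-Is64BitHost` and `Assert-64BitHost`). Checking `[IntPtr]::Size` is a standard .NET way to tell the two process types apart.

```powershell
# Returns $true when the current PowerShell host is a 64-bit process.
function Test-Is64BitHost
{
    # A pointer is 8 bytes in a 64-bit process and 4 bytes in a 32-bit one.
    return ([IntPtr]::Size -eq 8)
}

# Throws a readable error instead of a confusing "snapin not installed" message.
function Assert-64BitHost
{
    if (-not (Test-Is64BitHost))
    {
        throw "This PowerShell host is 32-bit; SharePoint 2013 needs the 64-bit shell or ISE.";
    }
}

# Hypothetical usage: call this near the top of each script, before Add-PSSnapin.
# Assert-64BitHost
```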

This is of course optional. If you don't mind working in Visual Studio, or have found a plugin that works for you, go for it. What keeps me in the ISE is that saving a script there automatically checks the file out in Visual Studio (if you're working with TFS). If I had to deal with that manually, I would have stayed in the IDE. Now that we have a process in place to edit our scripts, let's start diving into the rest of our PowerShell-driven deployments!

Feature Activator

I mentioned previously that I like the idea of a hidden event receiver. This allows us to take advantage of the scope-ability of SharePoint feature-driven deployments, while circumventing the fact that deployment is not really the intended paradigm of a SharePoint feature. The problem is that if the feature is hidden, we can't activate it in the UI. This forces us to script it, which is nice, as that makes our dev cycles even faster with one less step. Here's the deployment process for hidden All Code features.

[Note: I am discussing hidden features as an optional approach, rather than the recommended one, because I'm not entirely convinced this is the way to go. Hidden features are one less thing for administrators to worry about (and indeed to screw up, as in the accidental deactivation scenario I described earlier). Even so, my main fear is that I'm just a bit leery of hidden functionality.

Even though it'll be documented and scripted, I'm afraid it'll get lost in the mix (especially since no one reads documentation or touches a script they weren't directly involved with). "Where did this content type come from?" would be a valid question for an administrator to ask me three years after I roll off a project. I just like seeing it there in the list of features. I like being able to control its destiny in the site settings if I have to.]

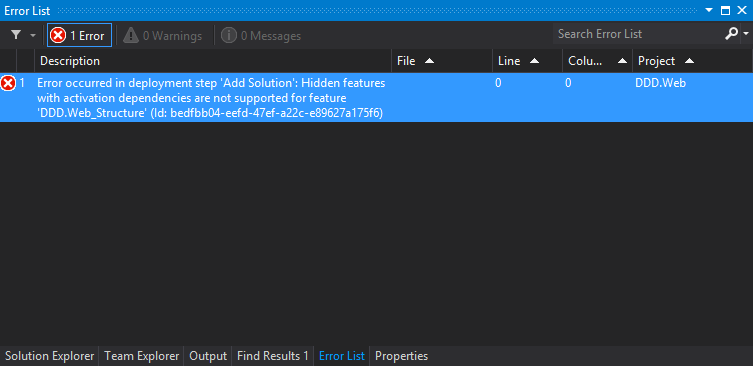

One additional thing to note if you consider using hidden features is that they do not support feature activation dependencies. This sort of makes sense. As I said before, my biggest hesitation about hidden features is that, as time passes after a final production deployment, we can simply lose track of where certain functionality or structure came from. So if you see this Visual Studio error, you need to give up either activation dependencies or hidden features.

There is no "correct" answer here; it's up to you; you just can't do both!

Writing scripts to automatically activate features makes sense for production deployments. But what about development ones, when you can take advantage of all the power of having Visual Studio plugged into a SharePoint environment? It might seem like I'm re-inventing the wheel a bit here, but it gives you more control than Visual Studio's automated process.

So like I said, there are a lot of pros to using this approach; let's take a look. First, we need to mark the feature as hidden. Open Structure.Template.xml and add a "Hidden" attribute to the "Feature" element with its value set to "true." (Yes, that's some manual XML...sorry: it's not completely avoidable in a SharePoint 2013 project.)

Code Listing 37: Structure.Template.xml

```xml
<?xml version="1.0"?>
<Feature xmlns="http://schemas.microsoft.com/sharepoint/" Hidden="true" ActivateOnDefault="false" AutoActivateInCentralAdmin="true">
</Feature>
```
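If you want a quick sanity check that the attribute actually landed (for example, before running the activation scripts), PowerShell's `[xml]` type accelerator can read the template. The sketch below inlines the markup from the listing. Reading the real file with `Get-Content` instead assumes you know where your template lives; the path in the comment is illustrative.

```powershell
# Inline copy of the template markup; in practice you might use something like
#   [xml]$template = Get-Content "Structure.Template.xml"   (illustrative path)
[xml]$template = @"
<?xml version="1.0"?>
<Feature xmlns="http://schemas.microsoft.com/sharepoint/" Hidden="true" ActivateOnDefault="false" AutoActivateInCentralAdmin="true">
</Feature>
"@

# XML attributes come back as strings, so compare against "true" explicitly.
$isHidden = ($template.Feature.Hidden -eq "true");
Write-Host ("Hidden: " + $isHidden);
```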

Of course, you could configure this to happen manually back in the "SharePoint Project Properties" section. However, having this broken out into its own script will be useful for production deployments, when we won't have Visual Studio to do the grunt work for us. Besides, it's a bit onerous to keep switching the Active Deployment Configuration in the project settings and the order of the features in the Package Designer for different scenarios.

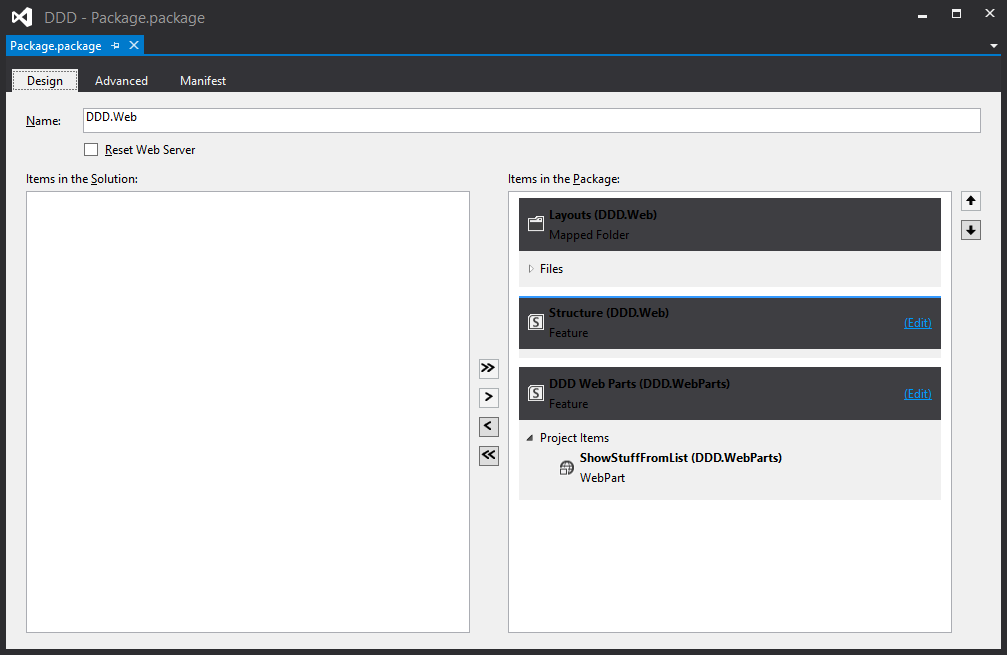

This is especially true if you have feature activation dependencies. Visual Studio doesn't detect these; it simply activates the features in the order they are listed. In the screen shot, Structure will be activated before Web Parts, even if it has an activation dependency that makes this ordering illogical. This is another case where, when the tool fails at an edge case, I want to control it myself.

Additionally, remember that Deployment Driven Design takes into heavy consideration the fact that we are more likely than not working in a team environment. Certain settings are "part" of the deployment (anything in the Package or Feature Designers) and can be checked into TFS. However, since other configurations are part of the Visual Studio project or solution properties, they might not necessarily go up and down cleanly to and from TFS. Having explicit scripts for different deployment tasks makes collaboration across your development team and administrative documentation much easier.

So whether or not you go with a hidden feature or make heavy use of the Package Designer, here's the second script in our arsenal: FeatureActivator.ps1. This bad boy defines the list of features that will be activated for a solution. The name "FeatureActivator" is generic for this book. In a real-world scenario, multiple scripts serving this purpose could be called "ActivateUIFeatures" or "SearchFeatureActivator." The paradigm is the same: for the context of this deployment, activate these features.

Code Listing 38: FeatureActivator.ps1

```powershell
#initialization
param($url = $(Read-Host -prompt "Url"), $path = $(Split-Path -Parent $MyInvocation.MyCommand.Path))
#ensure sharepoint
if((Get-PSSnapin Microsoft.Sharepoint.Powershell -ErrorAction SilentlyContinue) -eq $null)
{
    #load snapin
    Add-PSSnapin Microsoft.SharePoint.Powershell;
}
#warm up site
$script = Join-Path $path "\SiteWarmerUpper.ps1";
. $script -Url $url;
#get feature guids
[System.Reflection.Assembly]::LoadWithPartialName("DDD.Common");
Write-Host;
$webparts = [DDD.Common.Constants+Features]::WebParts;
$structure = [DDD.Common.Constants+Features]::Structure;
#activate webparts (on site collection)
Write-Host;
Write-Host ("Activating Webparts (on the site collection)...") -ForegroundColor Magenta;
$script = Join-Path $path "\FeatureEnsureer.ps1";
. $script -Url $url -Id $webparts -Scope "site";
#activate structure (on the site collection)
Write-Host;
Write-Host ("Activating Structure (on the site collection)...") -ForegroundColor Magenta;
$script = Join-Path $path "\FeatureEnsureer.ps1";
. $script -Url $url -Id $structure -Scope "site";
```

In Line #'s 21 and 26 I call the "FeatureEnsureer" script (which we'll look at next), which actually does the work of activating (or re-activating) the feature. FeatureActivator's only job is to queue up FeatureEnsureer scripts and pass them the proper parameters. Line #'s 13, 15, and 16 use reflection to pull the feature GUIDs out of our Constants class and use them to identify the features. This lets us maintain Deployment Driven Design's "no string comparison" policy.
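The `+` in `[DDD.Common.Constants+Features]` is how PowerShell names a nested .NET class. To try the syntax without the real DDD.Common assembly, this sketch compiles a hypothetical stand-in (`DDDSample.Constants.Features`, with placeholder GUIDs) via `Add-Type`. Only the lookup syntax mirrors the listing.

```powershell
# Hypothetical stand-in for the real Constants class; the GUIDs are placeholders.
Add-Type -TypeDefinition @"
namespace DDDSample
{
    public static class Constants
    {
        public static class Features
        {
            public static readonly System.Guid WebParts = new System.Guid("11111111-1111-1111-1111-111111111111");
            public static readonly System.Guid Structure = new System.Guid("22222222-2222-2222-2222-222222222222");
        }
    }
}
"@

# Nested types are addressed as Outer+Inner; static fields are read with "::".
$webparts = [DDDSample.Constants+Features]::WebParts;
$structure = [DDDSample.Constants+Features]::Structure;
Write-Host ("WebParts feature: " + $webparts);
Write-Host ("Structure feature: " + $structure);
```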

We also kick off the "SiteWarmerUpper" script from here; we'll get to that later. Whenever we see a script call another script in an All Code deployment, we use the "dot" notation. Dot-sourcing runs the "child" script in the caller's scope, inside the same PowerShell process, so the child can read (and overwrite) the caller's variables. Because it does not start a separate process, it does not by itself protect against the stale DLL issue, where a PowerShell instance caches its loaded DLLs and may not pick up newly-GAC'd code. Only a fresh PowerShell process is guaranteed to load the newly GAC'd assemblies.
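A quick, self-contained way to see what dot-sourcing does (and doesn't do) is to compare it with the call operator (`&`). This sketch uses a scriptblock as a stand-in for a child script, since scriptblocks and .ps1 files follow the same scoping rules. The variable names are illustrative.

```powershell
# Stand-in "child script": sets a variable and reports which process it ran in.
$child = { $childVar = "set by child"; $PID };

# Dot-sourcing: the child runs in the caller's scope, so $childVar persists.
$childVar = $null;
$dotPid = . $child;
$afterDot = $childVar;

# Call operator: the child gets its own scope, so $childVar is discarded.
$childVar = $null;
$ampPid = & $child;
$afterAmp = $childVar;

# Both report this session's process id: neither form starts a new process.
Write-Host ("Dot-sourced: childVar = '" + $afterDot + "', PID " + $dotPid);
Write-Host ("Call operator: childVar = '" + $afterAmp + "', PID " + $ampPid);
Write-Host ("Current PID: " + $PID);
```

If a step genuinely needs freshly GAC'd assemblies, start a new process (for example, `powershell.exe -File` followed by the script path) to get a clean slate. Neither form above does that.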

Feature Ensureer

Like I said, this script actually does the work of activating the feature. Here's what it looks like:

Code Listing 39: FeatureEnsureer.ps1

```powershell
#initialization
param($url = $(Read-Host -Prompt "Url"), $id = $(Read-Host -Prompt "Feature GUID"), $scope = $(Read-Host -Prompt "Scope"))
$ConfirmPreference = "None";
$feature = $null;
#ensure sharepoint
if((Get-PSSnapin Microsoft.Sharepoint.Powershell -ErrorAction SilentlyContinue) -eq $null)
{
    #load snapin
    Add-PSSnapin Microsoft.SharePoint.Powershell;
}
#get feature
switch($scope)
{
    "web"
    {
        $feature = Get-SPFeature -Web $url | where { $_.Id -eq $id };
    }
    "farm"
    {
        #-Farm is a switch; farm-scoped features are not looked up by url
        $feature = Get-SPFeature -Farm | where { $_.Id -eq $id };
    }
    "site"
    {
        $feature = Get-SPFeature -Site $url | where { $_.Id -eq $id };
    }
    "webapplication"
    {
        $feature = Get-SPFeature -WebApplication $url | where { $_.Id -eq $id };
    }
}
#check if we need to disable first
if ($feature -ne $null)
{
    #disable feature
    Disable-SPFeature -Url $url -Identity $id -Force;
}
#enable feature
Enable-SPFeature -Url $url -Identity $id -Force;
```

All this guy does is consider the passed-in scope of the feature, use that to grab it by its unique identifier, deactivate it if it exists, and then finally (re)enable it. Line #3 is interesting; it's setting the "global" PowerShell "ConfirmPreference" to "None." This is tantamount to passing "-Confirm:$false" to every commandlet that accepts it. Otherwise, these commands will prompt the user for assurance to continue. That is bad news for automated deployments, because it causes them to hang.
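To see why `$ConfirmPreference` matters, here is a sketch with a hypothetical function (`Remove-Thing`) that declares `ConfirmImpact = "High"`, as destructive commandlets typically do. PowerShell prompts whenever a command's impact is at or above `$ConfirmPreference`, which defaults to "High". Setting the preference to "None" turns those automatic prompts off.

```powershell
# Hypothetical destructive command that would normally ask for confirmation.
function Remove-Thing
{
    [CmdletBinding(SupportsShouldProcess = $true, ConfirmImpact = "High")]
    param([string]$Name)

    # ShouldProcess prompts only when ConfirmImpact >= $ConfirmPreference.
    if ($PSCmdlet.ShouldProcess($Name, "Remove"))
    {
        return ("Removed " + $Name);
    }
    return ("Skipped " + $Name);
}

# With the default preference ("High") the next call would stop and prompt;
# "None" lets it proceed unattended, which is what an automated deployment needs.
$ConfirmPreference = "None";
Remove-Thing -Name "demo"
```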

I've also hard-coded in the "Force" switch parameter, which means it'll reactivate already-activated features. This is important to understand: redeploying a solution deactivates any web application-scoped features, but leaves any site collection ones alone. If you don't force, you'll get an error saying that the feature is already activated, which is another form of deployment limbo. So either force, or explicitly deactivate before you redeploy.
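One way to harden the `switch` in FeatureEnsureer is to validate the scope when the parameters are bound. The script can then build the `Get-SPFeature` arguments as a hashtable for splatting. This is a sketch with a hypothetical helper (`Get-FeatureLookupArgs`). It only builds the arguments, so it runs without SharePoint. Note that `Get-SPFeature`'s `-Farm` parameter is a switch, so no URL goes with it.

```powershell
# Hypothetical helper: map a scope name onto Get-SPFeature parameters.
function Get-FeatureLookupArgs
{
    param(
        # Typos like "sitecollection" fail at bind time instead of silently matching nothing.
        [Parameter(Mandatory = $true)]
        [ValidateSet("web", "site", "webapplication", "farm")]
        [string]$Scope,
        [string]$Url
    )

    switch ($Scope)
    {
        "web"            { return @{ Web = $Url } }
        "site"           { return @{ Site = $Url } }
        "webapplication" { return @{ WebApplication = $Url } }
        "farm"           { return @{ Farm = $true } }
    }
}

# In FeatureEnsureer.ps1 the lookup could then read:
#   $lookup = Get-FeatureLookupArgs -Scope $scope -Url $url
#   $feature = Get-SPFeature @lookup | where { $_.Id -eq $id }
$lookup = Get-FeatureLookupArgs -Scope "farm" -Url "http://dev";
```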

Solution Deployer

Building a script that takes a WSP file, adds it to the SharePoint solution store, and deploys it to the farm actually got a bit more involved starting with SharePoint 2010. In 2007, getting the WSP created was a monstrous undertaking. Once you got there, though, you only had to string three little STSADM commands together ("stsadm -o" and switches are omitted below):

- addsolution

- deploysolution

- execadmsvcjobs

It's the last one that causes problems in 2010 (and now 2013 as well) in PowerShell. STSADM is still supported, but has been deprecated in favor of PowerShell (which is where the extra "involvement" I mentioned earlier comes from; as tempting as it is to just dust off our old 2007 batch files, we won't be living in the past in this book). Since solution deployment creates a job that does its work asynchronously instead of immediately kicking off a synchronous operation, "execadmsvcjobs" allowed our scripts to "wait" for these jobs to run, giving their asynchronous command calls a synchronous feel.

Well, there's nothing like that in PowerShell. (Not exactly true: there is "Start-SPAdminJob," but it doesn't work while the SharePoint Administration service is running, and we need that service running.) Instead, we need to use the SharePoint commandlets to "poll" the status of the job that is deploying our solution. When we determine that it's done, we continue on with the script. Even though this is more involved, I like it better. I'd rather explicitly wait for a particular job to complete than run some cryptic command that could be doing more than I intended.

Let's take a look:

Code Listing 40: SolutionDeployer.ps1

```powershell
#initialization
param($url, $path = $(Split-Path -Parent $MyInvocation.MyCommand.Path), $wsp = $(Read-Host -Prompt "WSP Filename"))
$ConfirmPreference = "None";
#ensure sharepoint
if ((Get-PSSnapin -Name Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue) -eq $null)
{
    #load snapin
    Add-PSSnapIn Microsoft.SharePoint.PowerShell;
}
#fix wsp: split any sub-path off the file name, but keep $path pointing at the scripts
if ($wsp.Contains("\"))
{
    #the wsp lives in a sub-folder of the script folder
    $wspFolder = Join-Path $path (Split-Path -Parent $wsp);
    $wsp = Split-Path -Leaf $wsp;
}
else { $wspFolder = $path; }
#check if solution exists
if ((Get-SPSolution | where { $_.Name -eq $wsp }) -ne $null)
{
    #retract first
    $script = Join-Path $path "\SolutionRetractor.ps1";
    . $script -url $url -Path $path -wsp $wsp;
}
else
{
    #not found
    Write-Host ($wsp + " was not found; skipping retraction.") -ForegroundColor Yellow;
}
#add solution
Write-Host;
Write-Host ("Adding " + $wsp + "...");
Add-SPSolution (Join-Path $wspFolder $wsp);
#deploy solution
Write-Host;
Write-Host ("Installing " + $wsp + "...");
#if a url was passed in, we need to force web app deploy
if ($url -ne $null)
{
    #web app deployment
    $app = Get-SPWebApplication | where { $_.Url -like $url + "*" };
    Install-SPSolution -Identity $wsp -Force -GACDeployment -WebApplication $app;
}
else
{
    #normal deployment
    Install-SPSolution -Identity $wsp -Force -GACDeployment;
}
#force job
$script = Join-Path $path "\Execadmsvcjobs.ps1";
. $script;
```

Note that the URL parameter is optional. This script uses it to determine whether the solution should be deployed to a particular web application. That is required if you have web application-scoped assets (such as SafeControls in the WSP manifest). What's interesting is that web application-scoped features do not require this if the feature doesn't deploy any assets (i.e. it is simply a host for a feature receiver).

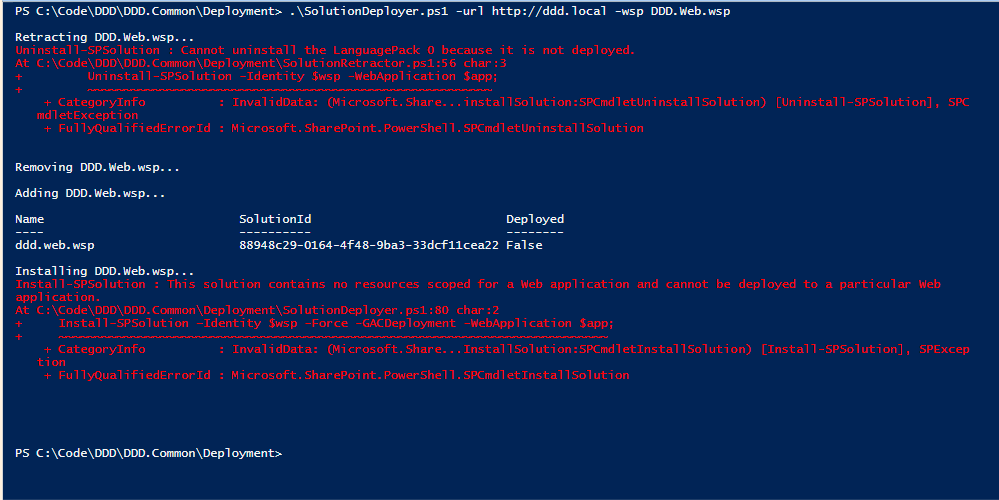

I usually determine this by observing my script barfing the first time it's run:

I purposely caused this red ink to appear. The first error seems to crop up occasionally when previous un/re-installations of solutions fail. It can safely be ignored. The second occurs when we specify the URL parameter for an aforementioned WSP that has no web-scoped resources. As you'll see later in our wrapper Scripts, we'll have a nice way to clearly tell the WSP's how to install themselves.

Line #'s 50 and 51 call another script that implements the STSADM "execadmsvcjobs" command (I adorably named the script in homage to this command). The shell of this logic was originally lifted from http://msdn.microsoft.com/en-us/library/ff459292.aspx. It has since gone through several rounds of OCD across various projects I've been on to land here in its final form. Basically, it keeps looping as long as a solution deployment or retraction job exists. I'll list the code next.

The stanza at Line #18 checks whether the WSP file exists in the solution store. If so, it invokes SolutionRetractor.ps1 (which we'll discuss after Execadmsvcjobs.ps1). Then it executes the add and deploy commands, so at this point of the script we are guaranteed that the current version of the solution has been deployed to the farm. One quick note on Line #41: we use the like operator and wildcard the URL because the URLs returned by Get-SPWebApplication include a trailing slash.
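One caveat with the wildcard: `-like` with a trailing `*` is a prefix match. A URL like "http://dev" would also match a web application at "http://dev2/". Here is a sketch of an exact comparison, using a hypothetical helper (`Test-SameWebAppUrl`) that normalizes the trailing slash instead:

```powershell
# Returns $true only when $Candidate is the same web application URL as $Url,
# whether or not $Url was typed with a trailing slash.
function Test-SameWebAppUrl
{
    param([string]$Candidate, [string]$Url)
    # Web application URLs carry a trailing slash, so add one if it's missing.
    $normalized = $Url.TrimEnd("/") + "/";
    # -eq on strings is case-insensitive in PowerShell.
    return ($Candidate -eq $normalized)
}

$urls = @("http://dev/", "http://dev2/");
# Prefix wildcard: both entries match.
$urls | Where-Object { $_ -like ("http://dev" + "*") }
# Exact comparison: only "http://dev/" matches.
$urls | Where-Object { Test-SameWebAppUrl -Candidate $_ -Url "http://dev" }

# In SolutionDeployer.ps1 the lookup might become:
#   $app = Get-SPWebApplication | where { Test-SameWebAppUrl -Candidate $_.Url -Url $url }
```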

Execadmsvcjobs

Real quick, here's my PowerShell representation of the old aforementioned STSADM command:

Code Listing 41: Execadmsvcjobs.ps1

```powershell
#initialization
param($timeout = 120)
$ConfirmPreference = "None";
#ensure sharepoint
if((Get-PSSnapin Microsoft.Sharepoint.Powershell -ErrorAction SilentlyContinue) -eq $null)
{
    #load snapin
    Add-PSSnapin Microsoft.SharePoint.Powershell;
}
#search for executing solution-based timer jobs
while (($job = Get-SPTimerJob | where { ($_.Name -like "*solution-deployment*") -or ($_.Name -like "*solution-retraction*") }) -ne $null)
{
    #found multiple jobs; wait for the first one
    if($job.Count -gt 1)
    {
        $job = $job[0];
    }
    #wait for job to finish
    $name = $job.Name
    Write-Host -NoNewLine ("Waiting for " + $name + " to finish");
    while ((Get-SPTimerJob $name) -ne $null)
    {
        #detect timeout
        if($timeout -le 0)
        {
            throw ("Timed out waiting for " + $name + " to complete.");
        }
        #keep waiting
        Write-Host -NoNewLine ".";
        Start-Sleep -Seconds 1;
        $timeout--;
    }
    #output status
    Write-Host;
    $job.HistoryEntries | foreach { Write-Host ("Job Status: " + $_.Status) }
    Write-Host;
}
#done
Write-Host;
```

The only thing I want to mention is the "timeout" parameter (which defaults to 120 seconds). If a WSP deployment or retraction job hangs (which happens), this script would wait forever without the timeout. On a slower development machine (or a VM), you might want to bump this up to a few minutes. Otherwise, a job that would have finished may time out, and you'll have to re-run your script and remove the WSP manually in central administration.
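The counter in Execadmsvcjobs.ps1 is decremented once per loop. Each loop takes a little over a second (the one-second sleep plus the Get-SPTimerJob query), so the real wait runs somewhat longer than `$timeout` seconds. If that matters, a stopwatch keeps the timeout honest. This is a generic sketch with a hypothetical `Wait-Until` function. The SharePoint condition in the comment is an assumption about how you would plug it in.

```powershell
# Polls $Condition until it returns $true, throwing once $TimeoutSeconds of
# wall-clock time have elapsed. Returns how long the wait took.
# Hypothetical SharePoint condition:
#   { $null -eq (Get-SPTimerJob | where { $_.Name -like "*solution-deployment*" }) }
function Wait-Until
{
    param(
        [scriptblock]$Condition,
        [int]$TimeoutSeconds = 120,
        [int]$IntervalMilliseconds = 1000
    )
    $watch = [System.Diagnostics.Stopwatch]::StartNew();
    while (-not (& $Condition))
    {
        # Measure real elapsed time rather than counting loop iterations.
        if ($watch.Elapsed.TotalSeconds -ge $TimeoutSeconds)
        {
            throw ("Timed out after " + $TimeoutSeconds + " seconds.");
        }
        Write-Host -NoNewLine ".";
        Start-Sleep -Milliseconds $IntervalMilliseconds;
    }
    Write-Host;
    return $watch.Elapsed
}
```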

Solution Retractor

The counterpart to SolutionDeployer.ps1 is, of course, SolutionRetractor.ps1, which does the opposite. I really like having this procedure broken out. It lets me explicitly remove solutions when I need to re-baseline my environment after too many script errors or broken Structure deployments. It also comes in very handy in production when you need to keep the central admin solution store nice and tidy. Even though this code is very similar to SolutionDeployer.ps1, I'm going to be explicit and list it out:

Code Listing 42: SolutionRetractor.ps1

```powershell
#initialization
param($url, $path = $(Split-Path -Parent $MyInvocation.MyCommand.Path), $wsp = $(Read-Host -prompt "WSP Filename"))
$ConfirmPreference = "None";
if ((Get-PSSnapin -Name Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue) -eq $null)
{
    #load snapin
    Add-PSSnapIn Microsoft.SharePoint.PowerShell;
}
#check if solution exists
if ((Get-SPSolution | where { $_.Name -eq $wsp }) -ne $null)
{
    #uninstall solution
    Write-Host;
    Write-Host ("Retracting " + $wsp + "...");
    #if a url was passed in, we need to force web app uninstall
    if ($url -ne $null)
    {
        #web app uninstall
        $app = Get-SPWebApplication | where { $_.Url -like $url + "*" };
        Uninstall-SPSolution -Identity $wsp -WebApplication $app;
    }
    else
    {
        #normal uninstall
        Uninstall-SPSolution -Identity $wsp;
    }
    #force job
    $script = Join-Path $path "\Execadmsvcjobs.ps1";
    . $script;
    #retract solution
    Write-Host ("Removing " + $wsp + "...");
    Remove-SPSolution -Identity $wsp -Force;
}
else
{
    #not found
    Write-Host ($wsp + " was not found; skipping retraction.") -ForegroundColor Yellow;
}
```

Site Warmer Upper

Getting the solution deployed is only half the battle. In order to ensure that new code (and, more importantly, updated code from future deployments) will have its latest version loaded by SharePoint, we need to induce a coma in our environment, and then defibrillate it back to life. A mere IISRESET isn't enough; we need to bring down and revive every aspect of SharePoint that might execute anything in our assemblies.

Accomplishing this is the other task of our site warmer upper script. Deployment doesn't just entail physically getting your files onto the server. It also requires that the target environment is configured to properly host the code. Therefore, this script is the right place to accomplish these tasks. With All Code, the code itself is the configuration; we just need to nudge SharePoint and tell it that there's new logic to execute.

So other than an IISRESET, there are a few other "nudging" actions this script performs:

- Cycle the SharePoint Timer Service

- Deploy PDB files to the GAC

- Warm up site

Once all of these tasks are executed, the site will be ready to go with the latest code. Let's take a look at the PowerShell. I'm going to present a simplified version of it here so that you can see the bones; you can then expand on it with the meat of your own requirements. Advanced forms of this script can execute commands on remote servers (other web front ends, or WFEs) and fire off any custom registration that needs to happen. They can also send email to administrators, etc.

Code Listing 43: SiteWarmerUpper.ps1

```powershell
#initialization
param($url = $(Read-Host -prompt "Url"), $path = $(Split-Path -Parent $MyInvocation.MyCommand.Path))
$ConfirmPreference = "None";
#ensure sharepoint
if((Get-PSSnapin Microsoft.Sharepoint.Powershell -ErrorAction SilentlyContinue) -eq $null)
{
    #load snapin
    Add-PSSnapin Microsoft.SharePoint.Powershell;
}
#reset timer service
Write-Host;
Write-Host ("Cycling SharePoint Timer Service...");
net stop SPTimerV4
sleep 5
net start SPTimerV4
#reset iis
Write-Host;
Write-Host ("Resetting IIS...");
iisreset /noforce
iisreset /status
sleep 15
function GacPDB($pdb)
{
    #load this dll from the gac via the pdb name, and get the actual folder it lives in
    $location = [System.Reflection.Assembly]::LoadWithPartialName($pdb.ToLowerInvariant().Replace(".pdb", [System.String]::Empty));
    if ($location -eq $null)
    {
        #dll not found
        Write-Host ("Warning: " + $pdb + " has not been GAC'd.") -ForegroundColor Yellow;
    }
    else
    {
        #get location
        $location = $location.Location.ToLowerInvariant();
        $location = $location.Substring(0, $location.LastIndexOf("\"));
        #copy the pdb to this location in the gac
        xcopy (Join-Path $path $pdb) ($location) /R /Y;
    }
}
#gac PDB's (from the script folder, not whatever the current directory happens to be)
Write-Host;
Write-Host "GACing PDB's...";
dir $path | where { $_.Name -like "DDD*.pdb" } | foreach { GacPDB $_.Name; }
#warmup site
Write-Host;
Write-Host ("Warming up " + $url + "...");
$request = [System.Net.HttpWebRequest]::Create($url);
$request.UseDefaultCredentials = $true;
$request.Timeout = 150000;
$response = $request.GetResponse();
#release the connection
$response.Close();
```

Line #22 begins the definition of a function that GACs PDB files. Umm...what? The global assembly cache is nothing more than a folder that Windows wraps with a special shell to abstract its hierarchy. (And in fact, now in Server 2012, it's just a normal folder!) That's how we can have files with the same name but different version numbers. Since this shell only pertains to the UI (Windows Explorer), we can copy files in and out via the command line, no problem. By shoving our PDB files next to their DLLs in the innards of the GAC, we get line numbers in our exceptions' stack traces. Win.

So if the GAC is the brain that contains all of the code powering our SharePoint applications, think of this process as affixing electrodes to its temples. That way we get more data about what goes wrong (which casts unhandled exceptions as aneurysms). GacPDB takes in the file name of a PDB and slices off the extension. It then loads the assembly by that name to find its super-secret GAC folder location, and nestles the PDB next to the DLL. Note that the logic on Line #43 only picks up PDBs whose names start with "DDD" (replace with yours, of course); we only want our own application logic's symbols GAC'd.
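The file-selection half of GacPDB can be pulled out and tested on its own. This sketch (a hypothetical `Get-PdbAssemblyNames` helper) reads PDBs from an explicit folder. It derives each assembly name with `[System.IO.Path]::GetFileNameWithoutExtension`, which strips only the final extension. The GAC lookup and copy would stay as in the listing.

```powershell
# Hypothetical helper: list the PDBs to GAC and the assembly name each maps to.
function Get-PdbAssemblyNames
{
    param([string]$Path, [string]$Prefix = "DDD")
    Get-ChildItem -Path $Path -Filter "*.pdb" |
        Where-Object { $_.Name -like ($Prefix + "*") } |
        ForEach-Object {
            # "DDD.Common.pdb" -> "DDD.Common"; only the last extension is removed.
            New-Object PSObject -Property @{
                Pdb = $_.FullName;
                Assembly = [System.IO.Path]::GetFileNameWithoutExtension($_.Name)
            }
        }
}
```

Each returned `Assembly` value is what GacPDB would hand to `LoadWithPartialName` to find the DLL's folder in the GAC.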

Finally, we rapid-fire through the "second half" of this script's responsibilities (that I mentioned above): cycle the Timer Service, cycle IIS, GAC the PDB files, and warm up the site. Things might get a bit wonky when automating the solution deployment jobs as much as we are. So if the waiting portion of "Execadmsvcjobs" times out, or seems to hang, press Ctrl+C to break out of the script, and clean things up manually in central admin.

[Note: This script doesn't make sense to run in the context of Visual Studio, since the Visual Studio 2012 integration takes care of the WSP deployment for us.]

This script also comes in handy if you have multiple WFEs. You only need to perform the SharePoint operations once: the content database is updated centrally, and WSP deployment pushes all assets and web.config modifications to all WFEs. However, we still need to GAC our PDBs and reset the services on each server so that they are all serving up the freshest code.

The Wrapper Scripts

Finally, I have some awkwardly-titled scripts (to fit the naming scheme) that are wrappers around other scripts. These don't actually do much themselves; they merely act as shortcuts to perform specific tasks in specific orders in specific contexts. Their contribution to the deployment cause is simply saving you mouse clicks in Visual Studio and keystrokes in PowerShell.

By "specific contexts," I mean that these types of scripts are only meant to be run in certain environments. For example, let's consider "ResetterAndActivator.ps1" first. It is designed to be executed in an external PowerShell process directly from Visual Studio. We need to execute its constituent scripts (which of course are "SiteCollectionResetter.ps1" and "FeatureActivator.ps1") via a pretty nutty procedure I'll describe in the next section. "DoEverythinger.ps1," on the other hand, is what we use to do a fresh deployment to a newly provisioned farm on the server. Therefore, it runs in the normal SharePoint PowerShell environment, where we can simply kick off scripts using the ".\" prefix.

Let's take a look:

Code Listing 44: ResetterAndActivator.ps1

```powershell
#initialization
param($url = $(Read-Host -prompt "Url"), $path = $(Split-Path -Parent $MyInvocation.MyCommand.Path))
#ensure sharepoint
if ((Get-PSSnapin -Name Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue) -eq $null)
{
    #load snapin
    Add-PSSnapIn Microsoft.SharePoint.PowerShell;
}
#reset
Write-Host;
Write-Host ("Resetting Site Collection") -ForegroundColor Magenta;
Write-Host;
$script = Join-Path $path "\SiteCollectionResetter.ps1";
. $script -url $url;
#activate
Write-Host;
Write-Host ("Activating Features") -ForegroundColor Magenta;
Write-Host;
$script = Join-Path $path "\FeatureActivator.ps1";
. $script -url $url;
```

Basically, this script only has enough logic to physically invoke its child scripts. You'll see an almost identical story in DoEverythinger.ps1 below, except the wrapped scripts are called slightly differently. Also, on lines 11 and 17, you'll see that I use a different color for my output. When you have scripts calling scripts, it's helpful to use techniques like this to know which script is actually doing the work, especially when an error is thrown and you need to hunt down its cause.
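Since every wrapper repeats the same "blank line, colored header, Join-Path, invoke" pattern, it can be folded into one helper. Here's a minimal sketch; the function name "Invoke-ChildScript" and its parameters are hypothetical. Note one deliberate difference from the listings: it calls the child with the call operator ("&") instead of dot-sourcing it, so the child runs in its own scope.

```powershell
# Hypothetical helper: announce a child script in color, then run it.
function Invoke-ChildScript {
    param(
        [Parameter(Mandatory = $true)][string]$Folder,   # folder holding the child scripts
        [Parameter(Mandatory = $true)][string]$Name,     # e.g. 'SiteCollectionResetter.ps1'
        [string]$Label = $Name,                          # text shown in the colored header
        [hashtable]$Arguments = @{}                      # named parameters for the child
    )
    # Colored header, so you can tell which child is doing the work.
    Write-Host
    Write-Host $Label -ForegroundColor Magenta
    Write-Host
    $childPath = Join-Path $Folder $Name
    # "&" gives the child its own scope, so its parameters cannot
    # overwrite variables of the same name in the caller's scope.
    & $childPath @Arguments
}
```

In ResetterAndActivator.ps1, the reset step would then shrink to `Invoke-ChildScript -Folder $path -Name 'SiteCollectionResetter.ps1' -Label 'Resetting Site Collection' -Arguments @{ url = $url }`.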

In DoEverythinger.ps1, we "hard code" the WSPs so that we can specify the order in which they are deployed, and control whether the URL parameter is passed down to the SolutionDeployer.ps1 calls to deploy to a particular web application. I have played with making multi-WSP support more dynamic, but it's honestly better to just spell out the order so that everyone can clearly see what the script is doing.

Code Listing 45: DoEverythinger.ps1

```powershell
#initialization
param($siteUrl = $(Read-Host -prompt "Url"), $path = $(Split-Path -Parent $MyInvocation.MyCommand.Path))
#ensure sharepoint
if ((Get-PSSnapin -Name Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue) -eq $null)
{
    #load snapin
    Add-PSSnapIn Microsoft.SharePoint.PowerShell;
}
#deploy
Write-Host;
Write-Host ("Deploying Solutions") -ForegroundColor Magenta;
$script = Join-Path $path "\SolutionDeployer.ps1";
. $script -wsp DDD.Web.wsp;
$script = Join-Path $path "\SolutionDeployer.ps1";
. $script -wsp DDD.WebParts.wsp -url $siteUrl;
#reset
Write-Host;
Write-Host ("Resetting Site Collection") -ForegroundColor Magenta;
Write-Host;
$script = Join-Path $path "\SiteCollectionResetter.ps1";
. $script -url $siteUrl;
#activate
Write-Host;
Write-Host ("Activating Features") -ForegroundColor Magenta;
Write-Host;
$script = Join-Path $path "\FeatureActivator.ps1";
. $script -url $siteUrl;
```
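Code Listing 45 spells out the WSP order by hand. If you also want to guarantee that the globally deployed WSP never receives an explicit null url, one option is to keep the order as an explicit list and build each call's named parameters as a hashtable, adding url only where it's needed. This is a sketch under assumptions: "Get-DeploymentSteps" is a hypothetical name, and SolutionDeployer.ps1 is assumed to accept the "-wsp" and "-url" parameters used in the listing.

```powershell
# Hypothetical sketch: the deployment order is still spelled out explicitly,
# but each entry only carries a url when the WSP actually needs one.
function Get-DeploymentSteps {
    param([string]$SiteUrl)
    $solutions = @(
        @{ Wsp = 'DDD.Web.wsp' },                       # deployed globally: no url
        @{ Wsp = 'DDD.WebParts.wsp'; Url = $SiteUrl }   # web-scoped resources: needs a url
    )
    foreach ($solution in $solutions) {
        # Named parameters for SolutionDeployer.ps1; -url is added only when present,
        # so nothing is ever passed down as $null.
        $arguments = @{ wsp = $solution.Wsp }
        if ($solution.ContainsKey('Url') -and $solution.Url) { $arguments.url = $solution.Url }
        $arguments
    }
}
```

A wrapper could then run `foreach ($step in (Get-DeploymentSteps -SiteUrl $siteUrl)) { & (Join-Path $path 'SolutionDeployer.ps1') @step }`, splatting each hashtable onto the deployer.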

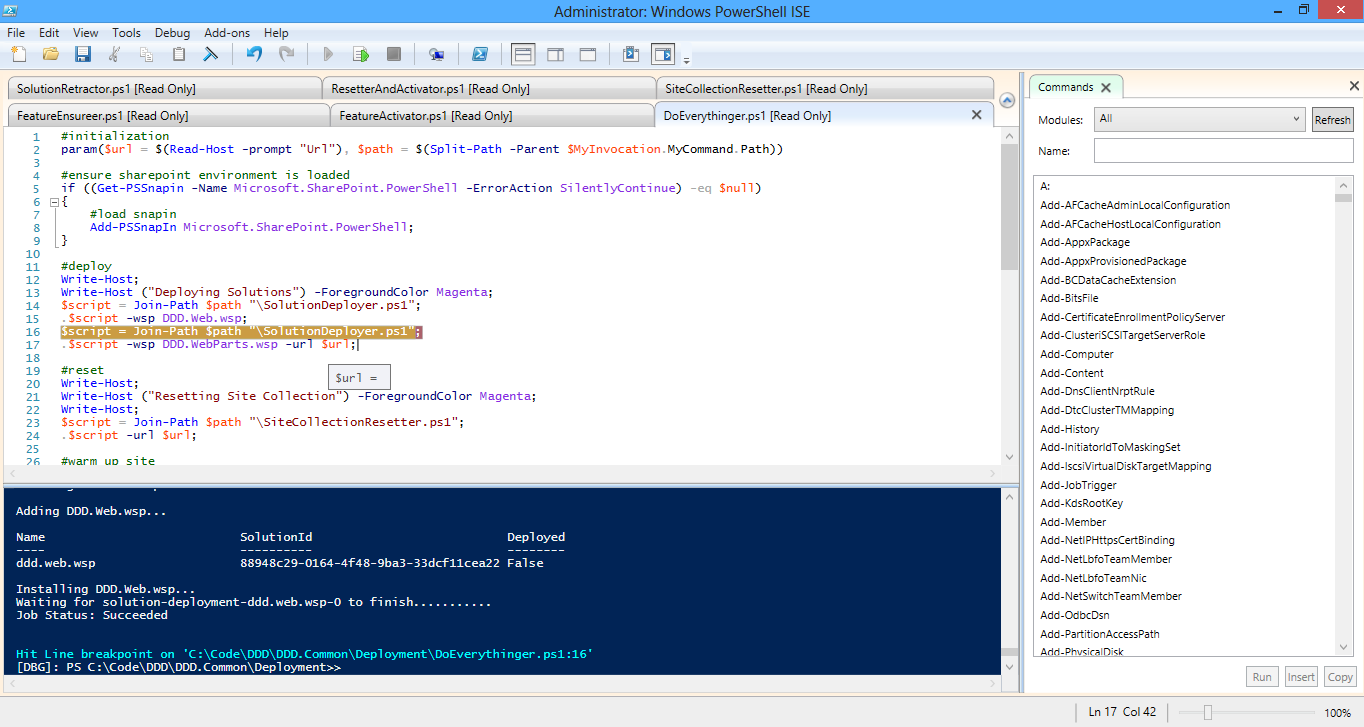

I want to point out a hard PowerShell lesson I had to learn. Look at Line #2: the name of the URL parameter is actually "siteUrl" when it's been just "url" in every other script? For someone who mentions OCD all the time, why would I suddenly go rouge with my variable naming? Because this is me leaning the aforementioned PowerShell lesson – about variable scopes. Look at the following screen shot, when I was using the "url" parameter in a previous version of DoEverythinger:

Notice that the value of our url variable is blank (essentially null). How can that be, when the script's param signature explicitly forces this variable to have a value? The culprit is the call that deploys DDD.Web.wsp. How so? That line doesn't have anything to do with the url variable! Exactly. Recall our discussion about web-scoped resources in WSPs, and how our SolutionDeployer script controls this by making the url parameter optional.

Since DDD.Web.wsp doesn't have any such resources and should be deployed globally, we implicitly pass a null for the optional url parameter by passing nothing at all. Here's where the lesson comes in: because the wrapper dot-sources its child scripts, each child runs in the parent's own scope rather than in a scope of its own. The child's param block therefore binds its url parameter (to null, in this case) directly in the parent's scope, overwriting the parent's url variable. In effect, the interpreter flattens the dot-sourced scripts into one.
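To see the collision in isolation, here's a small self-contained demonstration (not one of the chapter's scripts; the temp file name is arbitrary). It writes a throwaway child script with a "url" parameter, then calls it once with the call operator ("&") and once dot-sourced, passing nothing either time, just like the DDD.Web.wsp call.

```powershell
# Write a throwaway child script that declares a $url parameter.
$child = Join-Path ([System.IO.Path]::GetTempPath()) 'ScopeDemoChild.ps1'
Set-Content -Path $child -Value @('param($url)', '"child saw url=[$url]"')

$url = 'http://dev'

# Call operator: the child gets its own scope, so its $url parameter is local to it.
& $child
$afterCall = $url

# Dot-sourcing: the child runs in THIS scope, so binding its $url parameter
# (to $null, since nothing was passed) overwrites the caller's $url.
. $child
$afterDot = $url

Remove-Item -Path $child
"after &: [$afterCall]"
"after .: [$afterDot]"
```

The caller's $url survives the "&" call but comes back empty after the dot-sourced one, because only dot-sourcing binds the child's parameters in the caller's scope.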

I tried to fix this in several different "PowerShelly" ways:

- Set-Variable -Name url -Scope 0;

- $url = $url.clone();

- param($local:url =...

None of these worked; binding a null url inside the child wiped out the value back up in the parent wrapper script, because, with dot-sourcing, parent and child were technically reading and writing the very same variable. (Scope fail.) I think the ultimate problem is that I was using the same variable name "url" in all my scripts. I wanted to fix this the proper PowerShell way, so that I didn't have to revisit every script and change variable names, or impose some sort of dependency or convention on my PowerShell in general. We should be able to plug in any child script and have it just work. It turns out the easiest way to deal with this is to continue treating my child scripts as static helper methods and to tweak my wrapper scripts to make them happy. So "siteUrl" it is.

I need to point something else out in general: there's been an elephant in the room regarding our wrapper scripts. I'm referring to the infrastructure needed to implement multi-process PowerShell scripts, which is essentially a parent script that spawns other PowerShell instances to execute child scripts. The biggest gotcha with PowerShell is that a running instance will cache assemblies on you if you leave it open across development deployments.

This will put you in the same predicament as, for example, deploying a timer job update and forgetting to cycle the timer service. You'll be wondering why your new code isn't working...for hours...until you finally realize that your new code isn't running. During your script deployments, get into the habit of closing and reopening PowerShell windows (even though it takes a few obnoxious seconds to reload the SharePoint 2013 Management Shell), the same way we all got into the habit of running IISRESET after GACing new SharePoint code.
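If you want a quick sanity check before you start debugging, you can ask the current session whether it has already loaded your assembly. A minimal sketch follows; "Test-AssemblyLoaded" is a hypothetical helper, and the "DDD.Web" assembly name in the usage line is an assumption based on the WSP names. In the .NET Framework that SharePoint 2013's PowerShell runs on, an assembly can't be unloaded from the running AppDomain, so a hit here is a strong hint that this window is still using the copy it loaded earlier.

```powershell
# Hypothetical helper: has this session already loaded an assembly with this simple name?
function Test-AssemblyLoaded {
    param([Parameter(Mandatory = $true)][string]$Name)   # simple name, e.g. 'DDD.Web'
    $loaded = @([AppDomain]::CurrentDomain.GetAssemblies() |
        Where-Object { $_.GetName().Name -eq $Name })
    foreach ($assembly in $loaded) {
        # Dynamic assemblies have no file location, so don't ask them for one.
        $where = if ($assembly.IsDynamic) { '(dynamic)' } else { $assembly.Location }
        # Run with -Verbose to see which copy is in memory.
        Write-Verbose ("{0} loaded from '{1}'" -f $assembly.FullName, $where)
    }
    return ($loaded.Count -gt 0)
}
```

For example: `if (Test-AssemblyLoaded -Name 'DDD.Web') { Write-Warning 'DDD.Web is already loaded in this session; open a new PowerShell window.' }`.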

The way I deal with this (other than adding the text "Close and re-open PowerShell" to my build documentation in several different places) is to have all my "parent" scripts invoke their "child" scripts in new PowerShell processes. This ensures that the new instance will suck in all the freshest files and DLLs from the GAC. This way, if your code doesn't work, you know it's because of a bug and not a deployment limbo.
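Launching a child script in a brand-new process looks roughly like this. It's a minimal sketch: "Invoke-ScriptInNewProcess" is a hypothetical helper that starts the PowerShell executable matching the running edition with "-NoProfile -ExecutionPolicy Bypass -File", passes the child's arguments through, and treats a non-zero exit code as a failure.

```powershell
# Hypothetical helper: run a child script in a brand-new PowerShell process,
# so it loads fresh copies of every assembly instead of reusing this session's.
function Invoke-ScriptInNewProcess {
    param(
        [Parameter(Mandatory = $true)][string]$Path,   # full path of the child .ps1
        [string[]]$ArgumentList = @()                  # e.g. '-url', 'http://dev'
    )
    # powershell.exe for Windows PowerShell (e.g. the SharePoint 2013 Management Shell);
    # PowerShell 6 and later ship pwsh instead.
    $exeName = 'powershell.exe'
    if ($PSVersionTable.PSEdition -eq 'Core') {
        $exeName = if ($IsWindows) { 'pwsh.exe' } else { 'pwsh' }
    }
    $exe = Join-Path $PSHOME $exeName

    # The child's output flows back as this function's output.
    & $exe -NoProfile -ExecutionPolicy Bypass -File $Path @ArgumentList
    if ($LASTEXITCODE -ne 0) {
        throw "Child script '$Path' exited with code $LASTEXITCODE."
    }
}
```

A wrapper would then call, for example, `Invoke-ScriptInNewProcess -Path (Join-Path $path 'FeatureActivator.ps1') -ArgumentList '-url', $url`. Because the child lives in a separate process, it also can't clobber any of the parent's variables.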

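The first thing you've probably noticed is that these wrappers use the dot (".") operator to invoke their constituent scripts; take a look at lines 13 and 14 in ResetterAndActivator.ps1, for example. Be careful about what that dot actually does, though: it dot-sources the script, running it in the caller's own scope and process rather than in a new instance (which is exactly how the child's url parameter was able to clobber the parent's). To get a genuinely fresh instance, the child has to be launched in a new PowerShell process, for example by passing its full path and arguments to "powershell.exe -File".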

Next, we need to make the children "aware" that they might be called in their own process. Add the following code to the top of any script that could be called this way, which should be all of them, just in case. Loading the snap-in does add a few seconds or more to a script's execution time, so if you're sure a script will never run on its own, you can skip this and recoup that time. The code imitates what the SharePoint 2013 Management Shell loads on top of standard PowerShell: the API's DLLs and all those wonderful SharePoint commandlets. Without it, you'll be launching these scripts without the context of SharePoint and getting nothing but red ink.

Code Listing 46: <anything>.ps1

```powershell
#ensure sharepoint
if ((Get-PSSnapin -Name Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue) -eq $null)
{
    #load snapin
    Add-PSSnapIn Microsoft.SharePoint.PowerShell;
}
```
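If you'd rather get one clear message than a screen of red ink when the snap-in isn't available (for example, on a machine without SharePoint installed), here's a slightly stricter variant. It's a sketch, not the chapter's code; the "$sharePointAvailable" flag is a hypothetical name that your script would check before doing any SharePoint work.

```powershell
# Hypothetical stricter variant of the snap-in check.
$snapinName = 'Microsoft.SharePoint.PowerShell'
$sharePointAvailable = $false
if (-not (Get-Command -Name Add-PSSnapin -ErrorAction SilentlyContinue)) {
    # Snap-ins exist only in Windows PowerShell; PowerShell 6+ has no Add-PSSnapin.
    Write-Warning "This PowerShell edition cannot load $snapinName."
}
elseif (Get-PSSnapin -Name $snapinName -ErrorAction SilentlyContinue) {
    # Already loaded, e.g. inside the SharePoint 2013 Management Shell.
    $sharePointAvailable = $true
}
elseif (Get-PSSnapin -Registered -Name $snapinName -ErrorAction SilentlyContinue) {
    # Registered on this machine but not loaded yet: load it now.
    Add-PSSnapin -Name $snapinName
    $sharePointAvailable = $true
}
else {
    Write-Warning "$snapinName is not registered on this machine; is SharePoint installed?"
}
# A real script would bail out here, e.g.: if (-not $sharePointAvailable) { exit 1 }
```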

This is a general best practice that I like to keep among my good habits. In the next section, as an advanced topic, I'll demonstrate a way to kick off PowerShell scripts directly from Visual Studio without having to worry about this step. It's important to know how this stuff works; it's ultimately more important to get your work done faster. Additionally, I don't like the idea of having to add conventions like this to my scripts (or to anything). So if I can get around it with some slick refactoring or a different approach, I absolutely will!